Chapter 2 Introduction to spatial modeling

2.1 Introduction

In this section, a brief summary of continuous spatial processes will be provided, and the Stochastic Partial Differential Equations - SPDE approach -

as proposed in Lindgren, Rue, and Lindström (2011) will be summarized intuitively.

Provided that a Gaussian spatial process with Matérn covariance is a solution to SPDE’s

of the form presented in Lindgren, Rue, and Lindström (2011), we will give an

intuitive presentation of the main results of this link.

The Matérn covariance function is probably the most used one in geostatistics.

Therefore, the approach in Lindgren, Rue, and Lindström (2011) is useful

because the Finite Element Method (FEM) was used to build a GMRF

(Rue and Held 2005) representation and is available for practitioners

through the INLA package.

A recent review of spatial models in INLA can be found in Bakka et al. (2018).

2.1.1 Spatial variation

A point-referenced dataset is made up of any data measured at known locations. These locations may be in any coordinate reference system, most often longitude and latitude. Point-referenced data are common in many areas of science. This type of data appears in mining, climate modeling, ecology, agriculture and other fields. If the influence of location needs to be incorporated into a model, then a model for geo-referenced data is required.

As shown in Chapter 1, a regression model can be built using the coordinates of the data as covariates to describe the spatial variation of the variable of interest. In many cases, a non-linear function based on the coordinates will be necessary to adequately describe the effect of the location. For example, basis functions on the coordinates can be used as covariates in order to build a smooth function. This specification explicitly models the spatial trend on the mean.

Alternatively, it may be more natural to explicitly model the variation of the outcome considering that it may be similar at nearby locations. The first law of geography asserts: ``Everything is related to everything else, but near things are more related than distant things’’ (Tobler 1970). This means that the proposed model should have the property that an observation is more correlated with an observation close in space than with another observation that has been collected further away.

In spatial statistics it is common to formulate mixed-effects regression models in which the linear predictor is made of a trend plus a spatial variation (Haining 2003). The trend usually is composed of fixed effects or some smooth terms on covariates, while the spatial variation is usually modeled using correlated random effects. Spatial random effects often model (residual) small scale variation and this is the reason why these models can be regarded as models with correlated errors.

Models that account for spatial dependence may be defined according to whether locations are areas (cities or countries, for example) or points. For a review of models for different types of spatial data see, for example, Haining (2003) and Cressie and Wikle (2011).

The case of areal data (also known as lattice data) is often tackled by the use of generalized linear mixed-effects models given that variables are measured at a discrete number of locations. Given a vector of observations \(\mathbf{y} = (y_1, \ldots, y_n)\) from \(n\) areas, the model can be represented as

\[ y_i|\mu_i,\mathbf{\theta} \sim f(\mu_i; \mathbf{\theta}) \]

\[ g(\mu_i) = X_i \mathbf{\beta} + u_i;\ i = 1, \ldots, n \]

Here, \(f(\mu_i; \mathbf{\theta})\) is a distribution with mean \(\mu_i\) and hyperparameters \(\mathbf{\theta}\). The function \(g(\cdot)\) links the mean of each observation to a linear predictor on a vector of covariates \(X_i\) with coefficients \(\mathbf{\beta}\). The terms \(u_i\) represent random effects, distributed usually using a Gaussian multivariate distribution with zero mean and precision matrix \(\tau Q\).

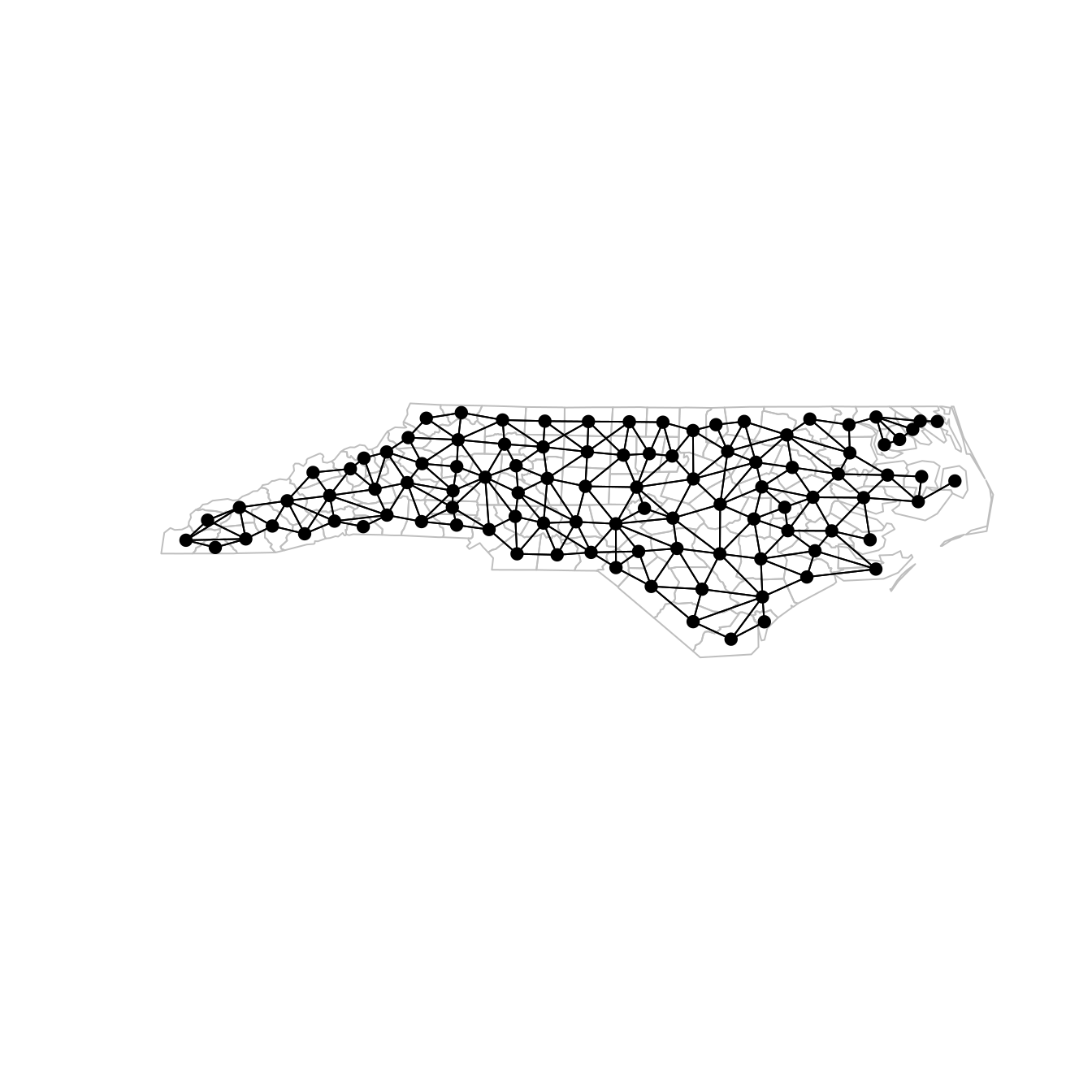

While \(\tau\) is a precision hyperparameter, the structure of the precision matrix is given by the matrix \(Q\) and is defined in a way that captures the spatial correlation in the data. For lattice data, \(Q\) is often based on the adjacency matrix that represents the neighborhood structure of the areas where data has been collected. This adjacency is illustrated in Figure 2.1, where the adjacency structure of the 100 counties in North Carolina is represented by a graph. This graph can be represented using a \(100\times 100\) matrix \(W\) with \(1\) at entry \((i, j)\) if counties \(i\) and \(j\) are neighbors and 0 otherwise.

Figure 2.1: Counties in North Carolina and their neighborhood structure.

A simple way to define spatially correlated random effects using the adjacency matrix \(W\) is to take \(Q = I - \rho W\), where \(I\) is the identity matrix and \(\rho\) is a spatial autocorrelation parameter. This is known as a CAR specification (Schabenberger and Gotway 2004). Nevertheless, there are other ways to exploit \(W\) to define \(Q\) to model spatial autocorrelation. See, for example, Haining (2003), Cressie and Wikle (2011) or Banerjee, Carlin, and Gelfand (2014).

Spatial data that is observed at specific locations is usually divided in two particular cases, depending on whether locations are fixed (geostatistics or point-referenced data) or random (point process). Note that in both cases the underlying spatial process will be defined at every point of the study region and not at a discrete number of locations (as in the case of lattice data). A general description of model-based geostatistics can be found in Diggle and Ribeiro Jr. (2007). Point pattern analysis is thoroughly described in Illian et al. (2008), Diggle (2013) and Baddeley, Rubak, and Turner (2015), for example.

Modeling and analyzing data observed at specific locations is the primary object of this book and these two cases will be considered in more detail. Next, Gaussian random fields to model continuous spatial processes will be introduced.

2.1.2 The Gaussian random field

To introduce some notation, let \(\mathbf{s}\) be any location in a study area \(\mathbf{D}\) and let \(U(\mathbf{s})\) be the random (spatial) effect at that location. \(U(\mathbf{s})\) is a stochastic process, with \(\mathbf{s}\in \mathbf{D}\), where \(\mathbf{D}\subset \mathbb{R}^d\). Suppose, for example, that \(\mathbf{D}\) is a country and data have been measured at geographical locations, over \(d=2\) dimensions within this country.

We denote by \(u(\textbf{s}_i)\), \(i=1,2,\ldots,n\) a realization of \(U(\mathbf{s})\) at \(n\) locations. It is commonly assumed that \(u(\mathbf{s})\) has a multivariate Gaussian distribution. If \(U(\mathbf{s})\) is assumed to be continuous over space, then it is a continuously-indexed Gaussian field (GF). This implies that it is possible to collect these data at any finite set of locations within the study region. To complete the specification of the distribution of \(u(\mathbf{s})\), it is necessary to define its mean and covariance.

A very simple option is to define a correlation function based only on the Euclidean distance between locations. This assumes that given two pairs of points separated by the same distance \(h\), they will have the same degree of correlation; Abrahamsen (1997) presents Gaussian random fields and correlation functions.

Now suppose that data \(y_i\) have been observed at locations \(\textbf{s}_i\), \(i=1,...,n\). If an underlying GF generated these data, the parameters of this process can be fitted by considering \(y(\textbf{s}_i) = u(\textbf{s}_i)\), where observation \(y(\textbf{s}_i)\) is assumed to be a realization of the GF at the location \(\textbf{s}_i\). If it is further assumed that \(y(\textbf{s}_i) = \mu + u(\textbf{s}_i)\), then there is one more parameter to estimate. It is worth mentioning that the distribution of \(u(\mathbf{s})\) at a finite number of points is considered a realization of a multivariate Gaussian distribution. In this case, the likelihood function is the multivariate Gaussian distribution with covariance \(\Sigma\).

In many situations it is assumed that there is an underlying GF that cannot be directly observed. Instead, observations are data with a measurement error \(e_i\), i.e.,

\[\begin{equation} \tag{2.1} y(\textbf{s}_i) = u(\textbf{s}_i) + e_i . \end{equation}\]

It is common to assume that \(e_i\) is independent of \(e_j\) for all \(i\neq j\) and \(e_i\) follows a Gaussian distribution with zero mean and variance \(\sigma_e^2\). This additional parameter, \(\sigma_e^2\), is also known as the “nugget effect” in geostatistics. The covariance of the marginal distribution of \(y(\mathbf{s})\) at a finite number of locations is \(\Sigma_y = \Sigma + \sigma^2_e\mathbf{I}\). This is a short extension of the basic GF model, and gives one additional parameter to estimate. For more details about this geostatistics model see, for example, Diggle and Ribeiro Jr. (2007).

The spatial process \(u(\textbf{s})\) if often assumed to be stationary and isotropic. A spatial process is stationary if its statistical properties are invariant under translation; i.e., they are the same at any point of the study region. Isotropy means that the process is invariant under rotation; i.e., their properties do not change regardless of the direction we move around the study region. Stationary and isotropic spatial process play an important role in spatial statistics, as they have desirable properties, such as a constant mean and the fact that the covariance between any two points only depends on their distance and not on their relative position. See, for example, Cressie and Wikle (2011), Section 2.2, for more details about these properties.

The usual way to evaluate the likelihood function, which is just a multivariate Gaussian density for the model in Equation (2.1), usually considers a Cholesky factorization of the covariance matrix (see, for example, Rue and Held 2005). Because this matrix is dense, this is an operation of order \(O(n^3)\), so this is a “big \(n\) problem”. Some software for geostatistical analysis uses an empirical variogram to fit the parameters of the correlation function. However, this option does not make any assumptions about a likelihood function for the data or uses a multivariate Gaussian distribution for the spatially structured random effect. A good description of these techniques is available in Cressie (1993).

To model spatial dependence with non-Gaussian data, it is usual to assume a likelihood for the data conditional on an unobserved random effect, which is a GF. Such spatial mixed effects models fall under the model-based geostatistics approach (Diggle and Ribeiro Jr. 2007). It is possible to describe the model in Equation (2.1) within a larger class of models: hierarchical models. Suppose that observations \(y_i\) have been obtained at locations \(\textbf{s}_i\), \(i=1,...,n\). The starting model is

\[\begin{equation} \tag{2.2} \begin{array}{rcl} y_i|\mathbf{\beta},u_i,\mathbf{F}_i,\phi & \sim & f(y_i|\mu_i,\phi) \\ \mathbf{u}|\mathbf{\theta} & \sim & GF(0, \Sigma) \end{array} \end{equation}\]

where \(\mu_i = h(\mathbf{F}_i^{T}\mathbf{\beta} + u_i)\). Here, \(\mathbf{F}_i\) is a matrix of covariates with associated coefficients \(\mathbf{\beta}\), \(\mathbf{u}\) is a vector of random effects and \(h(\cdot)\) is a function mapping the linear predictor \(\mathbf{F}_i^{T}\mathbf{\beta} + u_i\) to E\((y_i) = \mu_i\). In addition, \(\mathbf{\theta}\) are parameters for the random effect and \(\phi\) is a dispersion parameter of the distribution of the data \(f(\cdot)\), which is assumed to be in the exponential family.

To write the GF (with the Gaussian noise as a nugget effect), \(y_i\) is assumed to have a Gaussian distribution (with variance \(\sigma_e^2\)), \(\mathbf{F}_i^{T}\mathbf{\beta}\) is replaced by \(\beta_0\) and \(\mathbf{u}\) is modeled as a GF. Furthermore, it is possible to consider a multivariate Gaussian distribution for the random effect but it is seldom practical to use the covariance directly for model-based inference. This is shown in Equation (2.3).

\[\begin{equation} \tag{2.3} \begin{array}{rcl} y_i|\mu_i,\sigma_e &\sim& N(y_i|\mu_i,\sigma_e^2) \\ \mu_i &=&\beta_0 + u_i\\ \mathbf{u}|\theta &\sim& GF(0, \Sigma) \end{array} \end{equation}\]

As mentioned in Section 2.1.1, in the analysis of areal data there are models specified by conditional distributions that imply a joint distribution with a sparse precision matrix. These models are called Gaussian Markov random fields (GMRF) and a good reference is Rue and Held (2005). It is computationally easier to make Bayesian inference when a GMRF is used than when a GF is used, because the computational cost of working with a sparse precision matrix in GMRF models is (typically) \(O(n^{3/2})\) in \(\mathbb{R}^2\). This makes it easier to conduct analyses with big \(n\).

This basic hierarchical model can be extended in many ways, and some extensions will be considered later. When the general properties of the GF are known, all the practical models that contain, or are based on this random effect can be studied.

2.1.3 The Matérn covariance

A very popular correlation function is the Matérn correlation function. It has a scale parameter \(\kappa>0\) and a smoothness parameter \(\nu>0\). For two locations \(\textbf{s}_i\) and \(\textbf{s}_j\), the stationary and isotropic Matérn correlation function is:

\[ Cor_M(U(\textbf{s}_i), U(\textbf{s}_j)) = \frac{2^{1-\nu}}{\Gamma(\nu)} (\kappa \parallel \textbf{s}_i - \textbf{s}_j\parallel)^\nu K_\nu(\kappa \parallel \textbf{s}_i - \textbf{s}_j \parallel) \]

where \(\parallel . \parallel\) denotes the Euclidean distance and \(K_\nu\) is the modified Bessel function of the second kind. The Matérn covariance function is \(\sigma^2_u Cor_M(U(\textbf{s}_i), U(\textbf{s}_j))\), where \(\sigma^2_u\) is the marginal variance of the process.

If \(u(\mathbf{s})\) is a realization from \(U(\mathbf{s})\) at \(n\) locations, \(\textbf{s}_1, ..., \textbf{s}_n\), its joint covariance matrix can be easily defined as each entry of this joint covariance matrix \(\Sigma\) is \(\Sigma_{i,j} = \sigma_u^2 Cor_M(u(\textbf{s}_i), u(\textbf{s}_j))\). It is common to assume that \(U(.)\) has a zero mean. Hence, we have now completely defined a multivariate distribution for \(u(\mathbf{s})\).

To gain a better understanding about the Matérn correlation, samples can be drawn from the GF process. A sample \(\mathbf{u}\) is drawn considering \(\mathbf{u} = \mathbf{R}^\top \mathbf{z}\) where \(\mathbf{R}\) is the Cholesky decomposition of the covariance at \(n\) locations (see below); i.e., the covariance matrix is equal to \(\mathbf{R}^\top \mathbf{R}\) with \(\mathbf{R}\) an upper triangular matrix, and \(\mathbf{z}\) is a vector with \(n\) samples drawn from a standard Gaussian distribution. It implies that \(\textrm{E}(\mathbf{u}) = \textrm{E}(\mathbf{R^\top z}) = \mathbf{R}^\top \textrm{E}(\mathbf{z}) = \mathbf{0}\) and \(\textrm{Var}(\mathbf{u}) = \mathbf{R}^\top\textrm{Var}(\mathbf{z})\mathbf{R}=\mathbf{R}^\top\mathbf{R}\).

Two useful functions to sample from a GF are defined below:

# Matern correlation

cMatern <- function(h, nu, kappa) {

ifelse(h > 0, besselK(h * kappa, nu) * (h * kappa)^nu /

(gamma(nu) * 2^(nu - 1)), 1)

}

# Function to sample from zero mean multivariate normal

rmvnorm0 <- function(n, cov, R = NULL) {

if (is.null(R))

R <- chol(cov)

return(crossprod(R, matrix(rnorm(n * ncol(R)), ncol(R))))

}Function cMatern() computes the Matérn covariance of two points at distance

h, which requires additional parameters nu and kappa. Function

rmvnorm0() computes n samples from a multivariate Gaussian distribution

using the Cholesky decomposition, which needs the covariance matrix cov or

the upper triangles matrix R from a Cholesky decomposition.

Hence, given \(n\) locations, function cMatern() can be used to compute the

Matérn covariance, and function rmvnorm0() can be used to draw samples. These steps are

collected into the function book.rMatern, which is available in the file

spde-book-functions.R. This file is available from the website of this

book.

In order to simplify the visualization of the properties of continuous spatial processes with a Matérn covariance, a set of \(n=249\) locations in the one-dimensional space from 0 to \(25\) will be considered.

# Define locations and distance matrix

loc <- 0:249 / 25

mdist <- as.matrix(dist(loc))Four values for the smoothness parameter \(\nu\) will be considered. The values for the \(\kappa\) parameter were determined from the range expression \(\sqrt{8\nu}/\kappa\), which is the distance that gives correlation near \(0.14\). By combining the four values for \(\nu\) with two range values, there are eight parameter configurations.

The values of the different parameters \(\nu\), range and \(\kappa\) are created as follows:

# Define parameters

nu <- c(0.5, 1.5, 2.5, 5.5)

range <- c(1, 4) Next, the different combinations of the parameters are put together in a

matrix:

# Covariance parameter scenarios

params <- cbind(nu = rep(nu, length(range)),

range = rep(range, each = length(nu)))Sampled values depend on the covariance matrix and the noise considered for the

standard Gaussian distribution. In the following example, five vectors of size

\(n\) are drawn from the standard Gaussian distribution. These five standard

Gaussian observations, z in the code below, were the same across the eight

parameter configurations in order to keep track of what the different parameter

configurations are doing.

# Sample error

set.seed(123)

z <- matrix(rnorm(nrow(mdist) * 5), ncol = 5)Therefore, there is a set of \(40\) different realizations, five for each parameter configuration:

# Compute the correlated samples

# Scenarios (i.e., different set of parameters)

yy <- lapply(1:nrow(params), function(j) {

param <- c(params[j, 1], sqrt(8 * params[j, 1]) / params[j, 2],

params[j, 2])

v <- cMatern(mdist, param[1], param[2])

# fix the diagonal to avoid numerical issues

diag(v) <- 1 + 1e-9

# Parameter scenario and computed sample

return(list(params = param, y = crossprod(chol(v), z)))

})

Figure 2.2: Five samples from the one-dimensional Matérn correlation function for two different range values (each column of plots) and four different values for the smoothness parameter (each line of plots).

These samples are shown in the eight plots in Figure 2.2. One important point to observe here is the main spatial trend in the samples, as it seems not to depend on the smoothness parameter \(\nu\). In order to appreciate the main trend, consider one of the five samples (i.e., one of the colors). Then, compare the curves for different values of the smoothness parameter. If noise is added to the smoothest curve, and the resulting curve is compared to the other curves fitted using different smoothness parameters, it is difficult to disentangle what is due to noise from what is due to smoothness. Therefore, in practice, the smoothness parameter is usually fixed and a noise term is added.

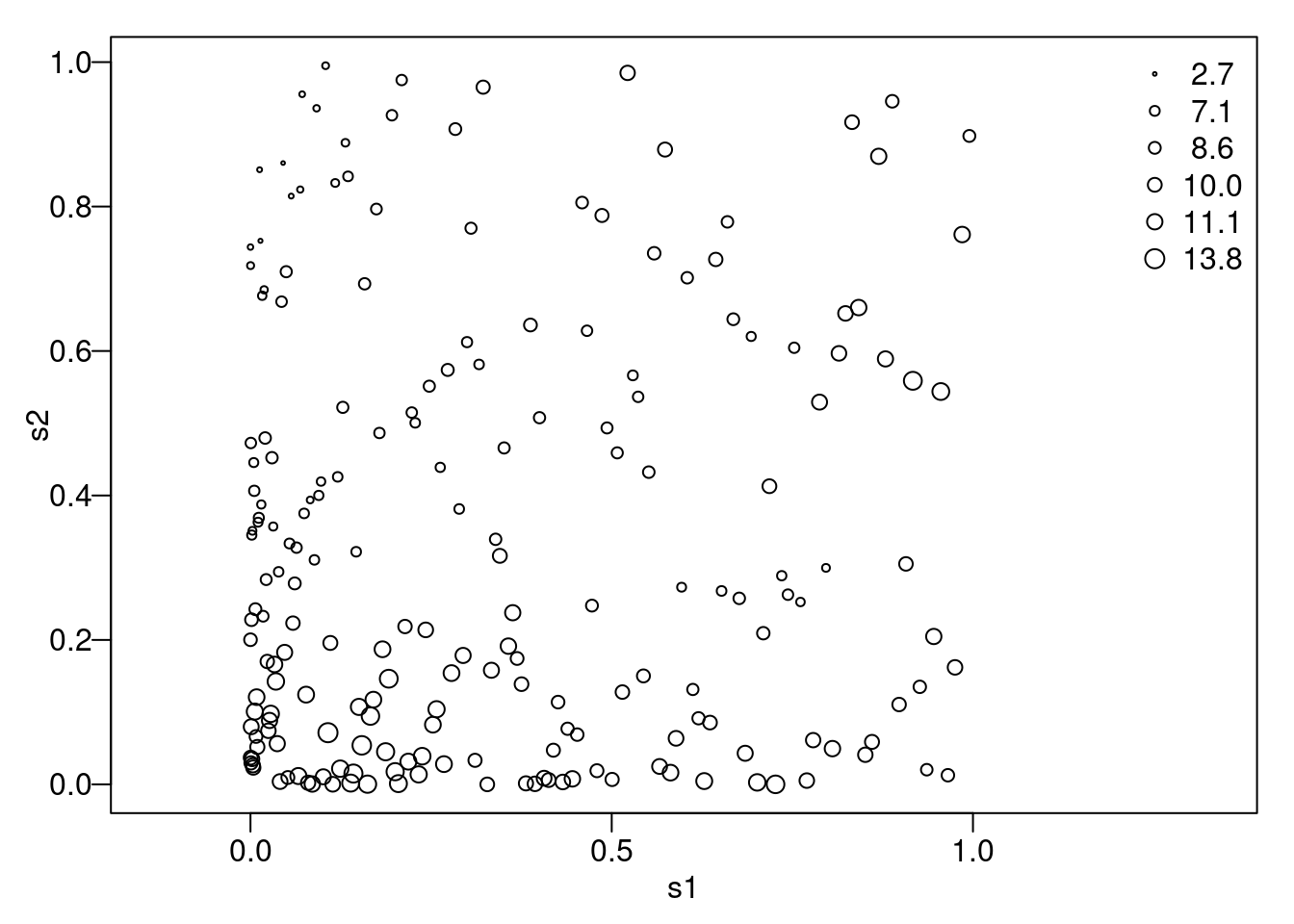

2.1.4 Simulation of a toy data set

Now, a sample from the model in Equation (2.1) will be drawn and it

will be used in Section 2.3. A set of \(n=100\) locations in

the unit square will be considered. This sample will have a higher density of

locations in the bottom left corner than in the top right corner. The R code

to do this is:

n <- 200

set.seed(123)

pts <- cbind(s1 = sample(1:n / n - 0.5 / n)^2,

s2 = sample(1:n / n - 0.5 / n)^2)To get a (lower triangular) matrix of distances, the dist() function

can be used as follows:

dmat <- as.matrix(dist(pts))The chosen parameters for the Matérn covariance are \(\sigma^2_u=5\), \(\kappa=7\) and \(\nu=1\). The mean is set to be \(\beta_0=10\) and the nugget parameter is \(\sigma^2_e=0.3\). These values are declared using:

beta0 <- 10

sigma2e <- 0.3

sigma2u <- 5

kappa <- 7

nu <- 1Now, a sample is obtained from a multivariate distribution

with constant mean equal to \(\beta_0\) and

covariance \(\sigma^2_e\mathbf{I} + \Sigma\), which is the

marginal covariance of the observations. \(\Sigma\) is the Matérn covariance

of the spatial process, mcor in the code below.

mcor <- cMatern(dmat, nu, kappa)

mcov <- sigma2e * diag(nrow(mcor)) + sigma2u * mcorNext, the sample is obtained by considering the Cholesky factor times a unit variance noise and add the mean:

R <- chol(mcov)

set.seed(234)

y1 <- beta0 + drop(crossprod(R, rnorm(n))) Figure 2.3 shows these simulated data in a graph of the locations where the size of the points is proportional to the simulated values.

Figure 2.3: The simulated toy example data.

These data will be used as a toy example in this tutorial. It is available in

the R-INLA package and can be loaded by typing:

data(SPDEtoy)2.2 The SPDE approach

Rue and Tjelmeland (2002) propose to approximate a continuous Gaussian field using

GMRFs, and demonstrated good fits for a range of commonly used

covariance functions. Although these “proof-of-concept” results were

interesting, their approach was less practical. All fits had to be

precomputed on a regular grid only. The real breakthrough, came with

Lindgren, Rue, and Lindström (2011), who considered a stochastic partial differential

equation (SPDE) whose solution is a GF with Matérn correlation.

Lindgren, Rue, and Lindström (2011) propose a new approach to represent a GF with Matérn

covariance, as a GMRF, by representing a solution of the SPDE using

the finite element method. This was possible only for some values of

the smoothness \(\nu\), where the continuous indexed random field was

Markov (Rozanov (1977)). The benefit is that the GMRF representation of

the GF, which can be computed explicitly, provides a sparse

representation of the spatial effect through a sparse precision

matrix. This enables the nice computational properties of the GMRFs

which can then be implemented in the INLA package.

Warning. In this section the main results in Lindgren, Rue, and Lindström (2011) are

summarized. If your purpose does not include understanding the

underlying methodology in depth, you can skip this section. If you

keep reading this section and find it difficult, do not be

discouraged. You will still be able to use INLA for applications even if

you have only a limited grasp of what is “under the hood”.

In this section we have tried to provide an intuitive approach to SPDE. However, if you are interested in the full details, they are in the Appendix of Lindgren, Rue, and Lindström (2011). In a few words, it uses the Finite Element Method (FEM, Ciarlet 1978; Brenner and Scott 2007; Quarteroni and Valli 2008) to provide a solution to a SPDE. They develop this solution by considering basis functions carefully chosen to preserve the sparse structure of the resulting precision matrix for the random field at a set of mesh nodes. This provides an explicit link between a continuous random field and a GMRF representation, which allows efficient computations.

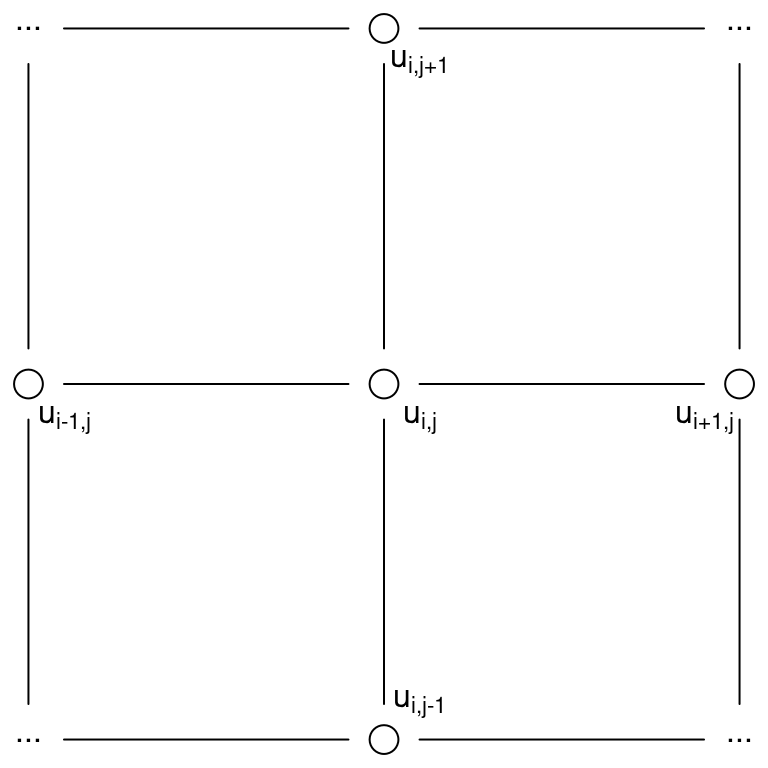

2.2.1 First result

The first main result provided in Lindgren, Rue, and Lindström (2011) is that a GF with a generalized covariance function, obtained when \(\nu > 0\) in the Matérn correlation function is a solution to a SPDE. This extends the result obtained by Besag (1981). A more statistical way to look at this result is by considering a regular two-dimensional lattice with number of sites tending to infinity. The representation of sites in a lattice can be seen in Figure 2.4.

Figure 2.4: Representation of sites in a two-dimensional lattice to estimate a spatial process.

In this case the full conditional distribution at the site \(i,j\) has expectation

\[ E(u_{i,j}|u_{-i,j}) = \frac{1}{a}(u_{i-1,j}+u_{i+1,j}+u_{i,j-1}+u_{i,j+1}) \]

and variance \(Var(u_{i,j}|u_{-i,j}) = 1/a\), with \(|a|>4\). In the representation using a precision matrix, for a single site, only the upper right quadrant is shown and with \(a\) as the central element, such that

\[\begin{equation} \tag{2.4} \begin{array}{|cc} -1 & \\ a & -1 \\ \hline \end{array} \end{equation}\]

A GF \(U(\mathbf{s})\) with Matérn covariance is a solution to the following linear fractional SPDE:

\[ (\kappa^2 - \Delta )^{\alpha/2}u(\mathbf{s}) = \mathbf{W}(\mathbf{s}),\;\;\; \mathbf{s} \in \mathbb{R}^d,\;\;\;\alpha=\nu+d/2,\;\;\kappa>0,\;\;\nu>0. \]

Here, \(\Delta\) is the Laplacian operator and \(\mathbf{W}(\mathbf{s})\) denotes a spatial white noise Gaussian stochastic process with unit variance.

Lindgren, Rue, and Lindström (2011) show that, for \(\nu=1\) and \(\nu=2\), the GMRF representation is a convolution of processes with precision matrix as in Equation (2.4). The resulting upper right quadrant precision matrix and center can be expressed as a convolution of the coefficients in Equation (2.4). For \(\nu=1\), using this representation, we have:

\[\begin{equation} \tag{2.5} \begin{array}{|ccc} 1 & & \\ -2a & 2 & \\ 4+a^2 & -2a & 1 \\ \hline \end{array} \end{equation}\]

and, for \(\nu=2\):

\[\begin{equation} \tag{2.6} \begin{array}{|cccc} -1 & & & \\ 3a & -3 & & \\ -3(a^2+3) & 6a & -3 & \\ a(a^2+12) & -3(a^2+3) & 3a & -1 \\ \hline \end{array} \end{equation}\]

An intuitive interpretation of this result is that as the smoothness parameter \(\nu\) increases, the precision matrix in the GMRF representation becomes less sparse. Greater density of the matrix is due to the fact that the conditional distributions depend on a wider neighborhood. Notice that it does not imply that the conditional mean is an average over a wider neighborhood.

Conceptually, this is like going from a first order random walk to a second order one. To understand this point, let us consider the precision matrix for the first order random walk, its square, and the precision matrix for the second order random walk.

q1 <- INLA:::inla.rw1(n = 5)

q1

## 5 x 5 sparse Matrix of class "dgTMatrix"

##

## [1,] 1 -1 . . .

## [2,] -1 2 -1 . .

## [3,] . -1 2 -1 .

## [4,] . . -1 2 -1

## [5,] . . . -1 1

# Same inner pattern as for RW2

crossprod(q1)

## 5 x 5 sparse Matrix of class "dsCMatrix"

##

## [1,] 2 -3 1 . .

## [2,] -3 6 -4 1 .

## [3,] 1 -4 6 -4 1

## [4,] . 1 -4 6 -3

## [5,] . . 1 -3 2

INLA:::inla.rw2(n = 5)

## 5 x 5 sparse Matrix of class "dgTMatrix"

##

## [1,] 1 -2 1 . .

## [2,] -2 5 -4 1 .

## [3,] 1 -4 6 -4 1

## [4,] . 1 -4 5 -2

## [5,] . . 1 -2 1As can be seen in the previous matrices, the differences between the precision matrix of a random walk of order 2 and the crossproduct of a precision matrix of a random walk of order 1 appear at the upper-left and bottom-right corners. The precision matrix for \(\alpha=2\), \(\mathbf{Q}_2=\mathbf{Q}_1\mathbf{C}^{-1}\mathbf{Q}_1\), is a standardized square of the precision matrix for \(\alpha=1\), \(\mathbf{Q}_1\). Matrices \(\mathbf{Q}_1\), \(\mathbf{C}\) and \(\mathbf{Q}_2\) are fully described later in Section 2.2.2.

2.2.2 Second result

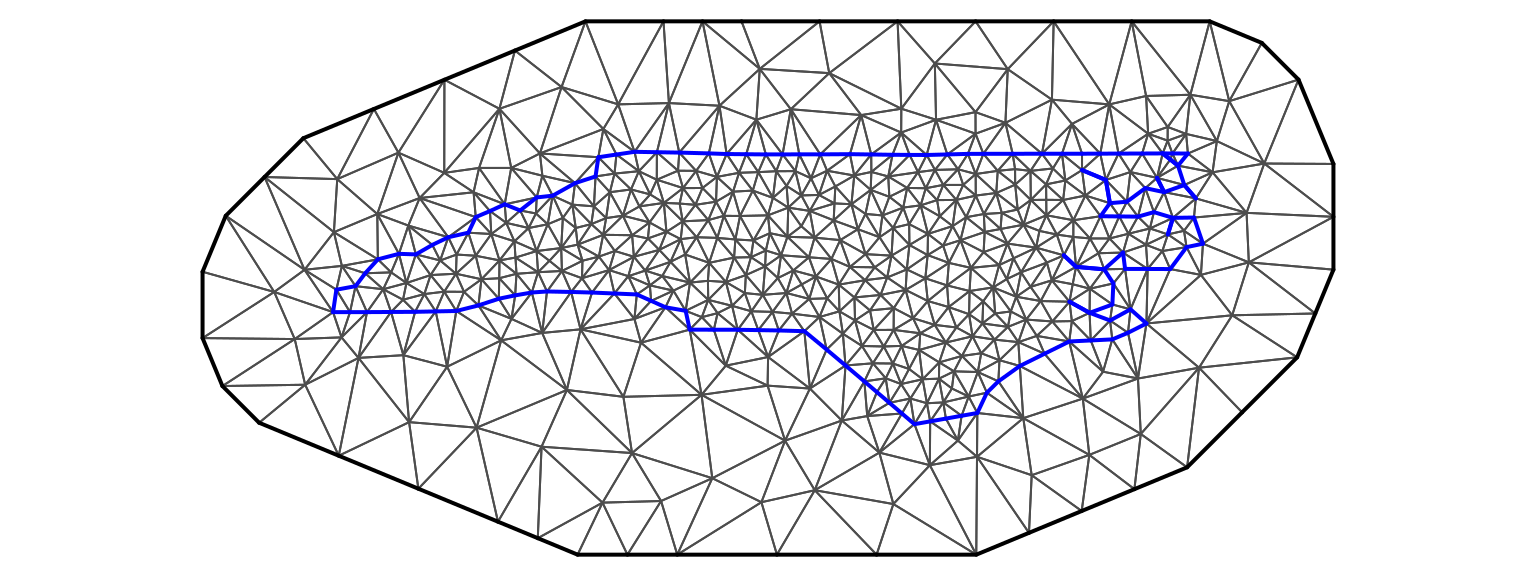

Point data are seldom located on a regular grid but distributed irregularly. Lindgren, Rue, and Lindström (2011) provide a second set of results that give a solution for the case of irregular grids. This was made considering the FEM, which is widely used in engineering and applied mathematics to solve differential equations.

The domain can be divided into a set of non-intersecting triangles, which may be irregular, where any two triangles meet in at most a common edge or corner. The three corners of a triangle are named vertices or nodes. The solution for the SPDE and its properties will depend on the basis functions used. Lindgren, Rue, and Lindström (2011) choose basis functions carefully in order to preserve the sparse structure of the resulting precision matrix.

The approximation is:

\[\begin{equation} \tag{2.7} u(\mathbf{s}) = \sum_{k=1}^{m}\psi_k(\mathbf{s})w_k \end{equation}\]

where \(\psi_k\) are basis functions and \(w_k\) are Gaussian distributed weights,

\(k=1,...,m\), with \(m\) the number of vertices in the triangulation. A

stochastic weak solution was considered to show that the joint distribution for

the weights determines the full distribution in the continuous domain.

More information about the weak solution and how to use the FEM in this case can be found in Bakka (2018).

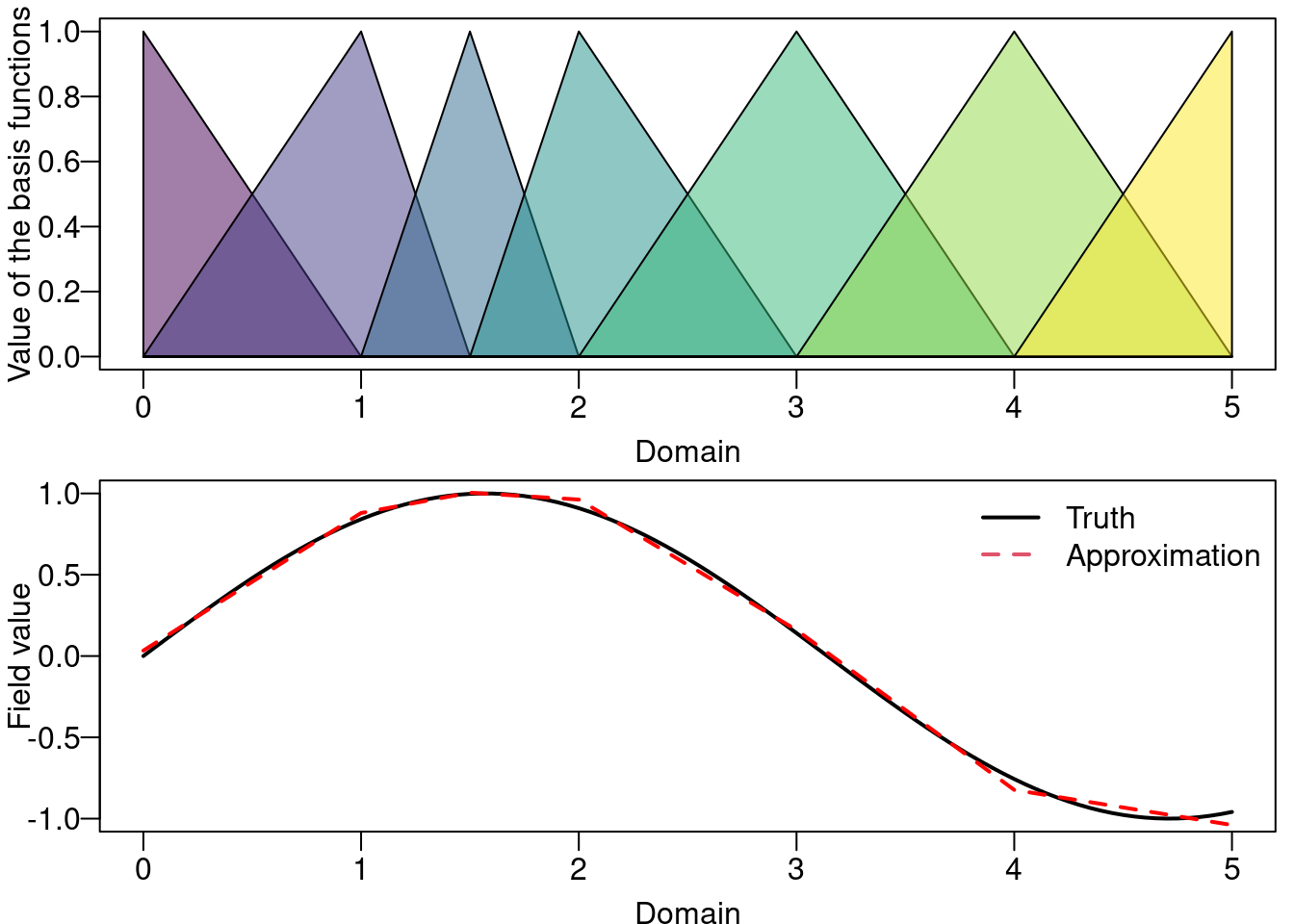

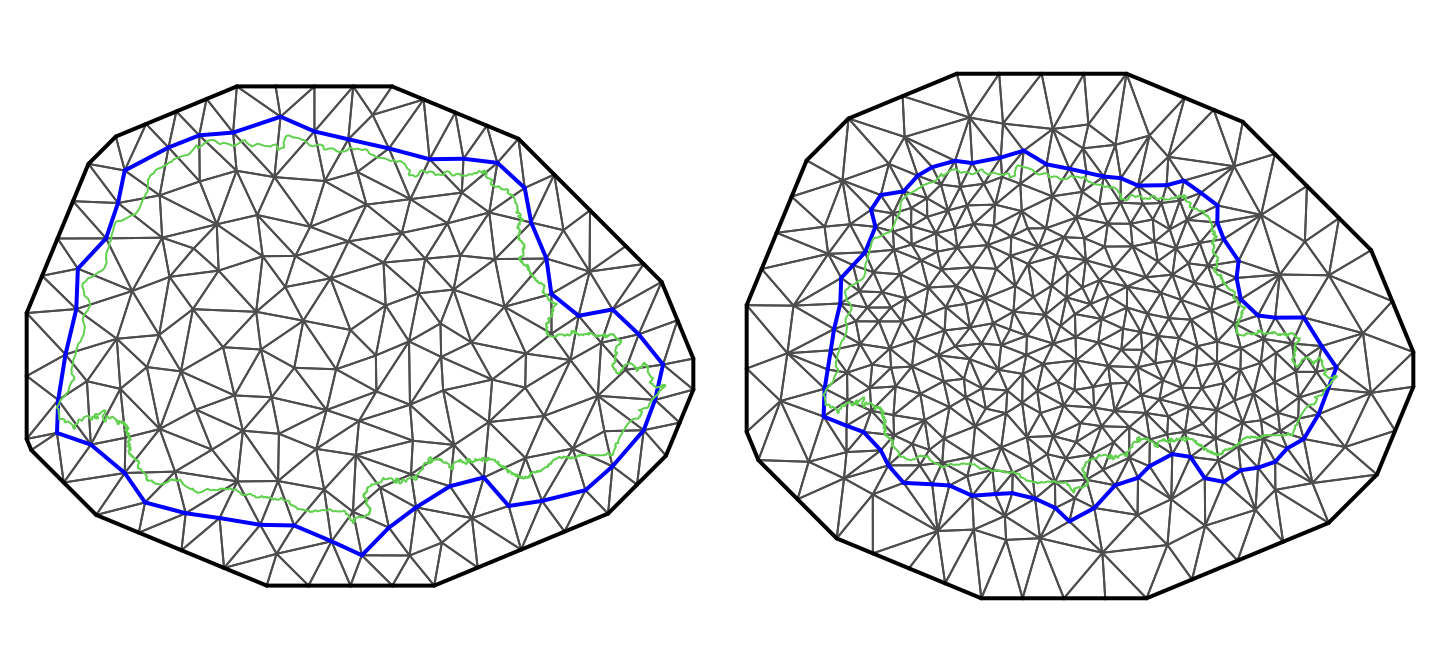

We will now illustrate how the process \(u(\mathbf{s})\) can be approximated to any point inside the triangulated domain. First, we consider the one-dimensional case in Figure 2.5. We have a set of six piece-wise linear basis functions shown at the top of this figure. The value of each of such basis functions is one at the basis knot, decreases linearly to zero until the center of the next basis function and is zero elsewhere. Thus for any point between two basis knots only two basis functions have a non-zero value. At the bottom of Figure 2.5, we have the sine function that was approximated using these basis functions. Note that we have used irregularly spaced knots here. Thus, the intervals between the knots do not need to be equally spaced (in a similar way as triangles in a mesh do not need to be equal). For example, it will be better to place more knots (or triangles in space) where the function changes more rapidly, as we did considering a knot at 1.5, shown in Figure 2.5. From this figure it is also clear that it would be better to have one knot around 4.5 as well.

Figure 2.5: One dimensional approximation illustration. The one dimensional piece-wise linear basis functions (top). A function and its approximation (bottom).

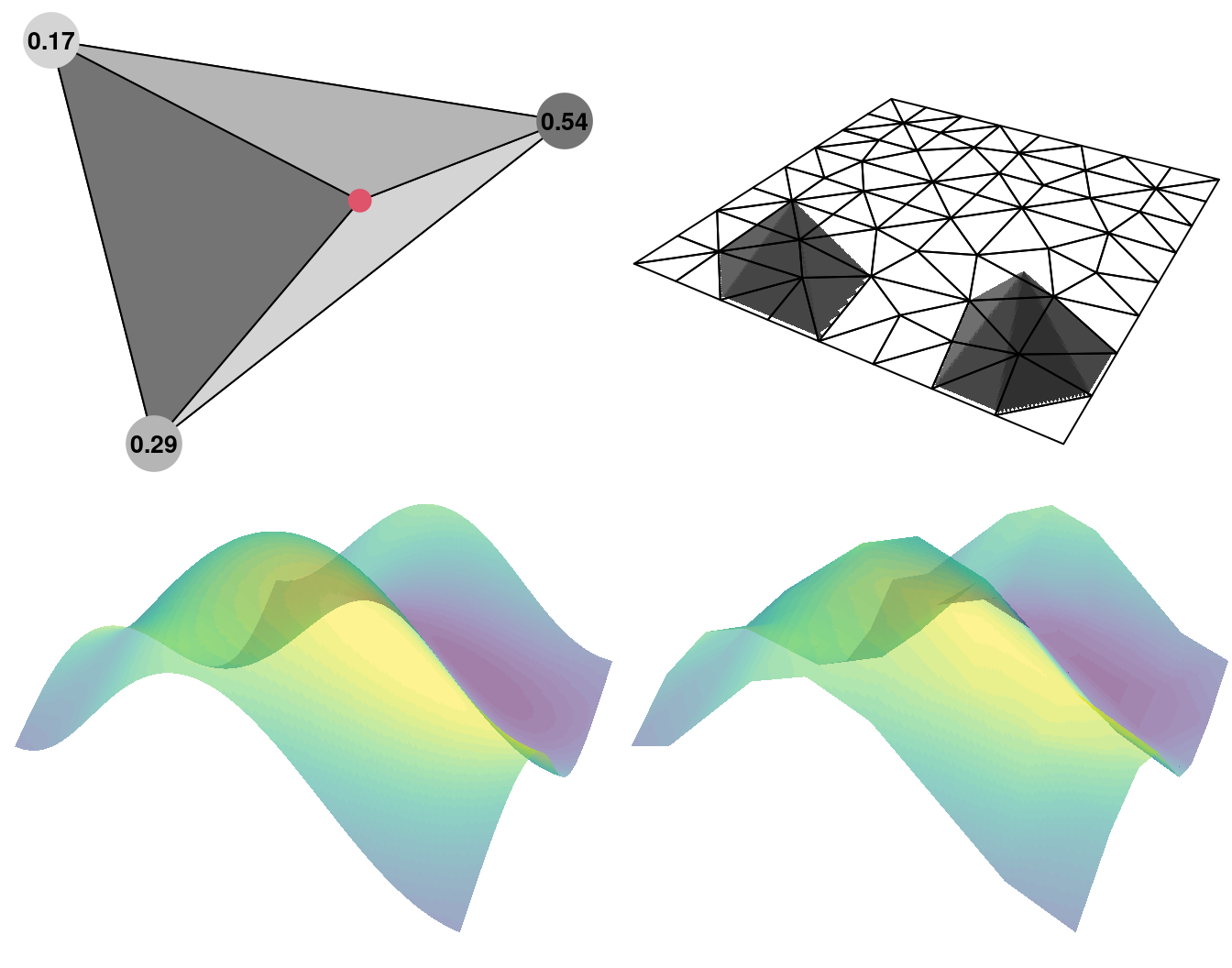

We now illustrate the approximation in two dimensional space considering piece-wise linear basis functions. These are based on triangles as a generalization of the idea in one dimension. Consider the big triangle shown on the top left of Figure 2.6, the point shown as a red dot inside this big triangle, and the three small triangles formed by this point and each vertex of the big one. The numbers at the vertex of the big triangle are equal to the area of the opposite triangle inside the big one (not formed by this vertex), divided by the area total of the big triangle. Thus, the three numbers sum up to one. These three numbers are the value of the basis function centered at the vertices of the big triangle being evaluated at the red point. These three values are considered for the approximation as they are the coefficients that multiply the function at each vertex of the big triangle.

Writing in matrix form, we have the projector matrix \(\mathbf{A}\) and when a point is inside a triangle, there are three non-zero values in the corresponding line of \(\mathbf{A}\). When the point is along an edge, there are two non-zero values and when the point is on top of a triangle vertex there is only one non-zero (which is equal to one). This is just an illustration of the barycentric coordinates of the point with respect to the coordinates of the triangle vertices known also as areal coordinates for this particular case.

Figure 2.6: Two dimensional approximation illustration. A triangle and the areal coordinates for the point in red (top left). All the triangles and the basis function for two of them (top right). A true field for illustration (bottom left) and its approximated version (bottom right).

We will now focus on the resulting precision matrix of the observations \(\mathbf{Q}_{\alpha,\kappa}\). It does consider the triangulation and the basis functions. The precision matrix matches the first result when applied to a regular grid. Consider the set of \(m\times m\) matrices \(\mathbf{C}\), \(\mathbf{G}\) and \(\mathbf{K}_{\kappa}\) with entries

\[ \mathbf{C}_{i,j} = \langle \psi_i, \psi_j\rangle, \;\;\;\;\; \mathbf{G}_{i,j} = \langle \nabla \psi_i, \nabla \psi_j \rangle, \;\;\;\;\; (\mathbf{K}_{\kappa})_{i,j} = \kappa^2 C_{i,j} + G_{i,j}\;. \]

Here, \(\langle \cdot, \cdot \rangle\) denotes the inner product and \(\nabla\) the gradient.

The precision matrix \(\mathbf{Q}_{\alpha,\kappa}\) as a function of \(\kappa^2\) and \(\alpha\) can be written as:

\[\begin{equation} \tag{2.8} \begin{array}{rcl} \mathbf{Q}_{1,\kappa} & = & \mathbf{K}_{\kappa} = \kappa^2\mathbf{C} + \mathbf{G}, \\ \mathbf{Q}_{2,\kappa} & = & \mathbf{K}_{\kappa}\mathbf{C}^{-1}\mathbf{K}_{\kappa} = \kappa^4\mathbf{C} + 2\kappa^2\mathbf{G} + \mathbf{G}\mathbf{C}^{-1}\mathbf{G} \\ \mathbf{Q}_{\alpha,\kappa} & = & \mathbf{K}_{\kappa}\mathbf{C}^{-1}Q_{\alpha-2,\kappa}\mathbf{C}^{-1}\mathbf{K}_{\kappa}, \;\;\;\mbox{for} \;\;\alpha = 3,4,...\;. \end{array} \end{equation}\]

Matrices \(\mathbf{C}\) and \(\mathbf{C}^{-1}\) can be replaced by \(\tilde{\mathbf{C}}\) and \(\tilde{\mathbf{C}}^{-1}\), respectively, with \(\tilde{\mathbf{C}}\) a diagonal matrix with diagonal elements

\[\begin{align} \tilde{\mathbf{C}}_{i,i} = \langle \psi_i, 1 \rangle, \end{align}\]

which is common when working with FEM. In this case, since \(\tilde{\mathbf{C}}\) is diagonal, \(\mathbf{K}_{\kappa}\) is as sparse as \(\mathbf{G}\).

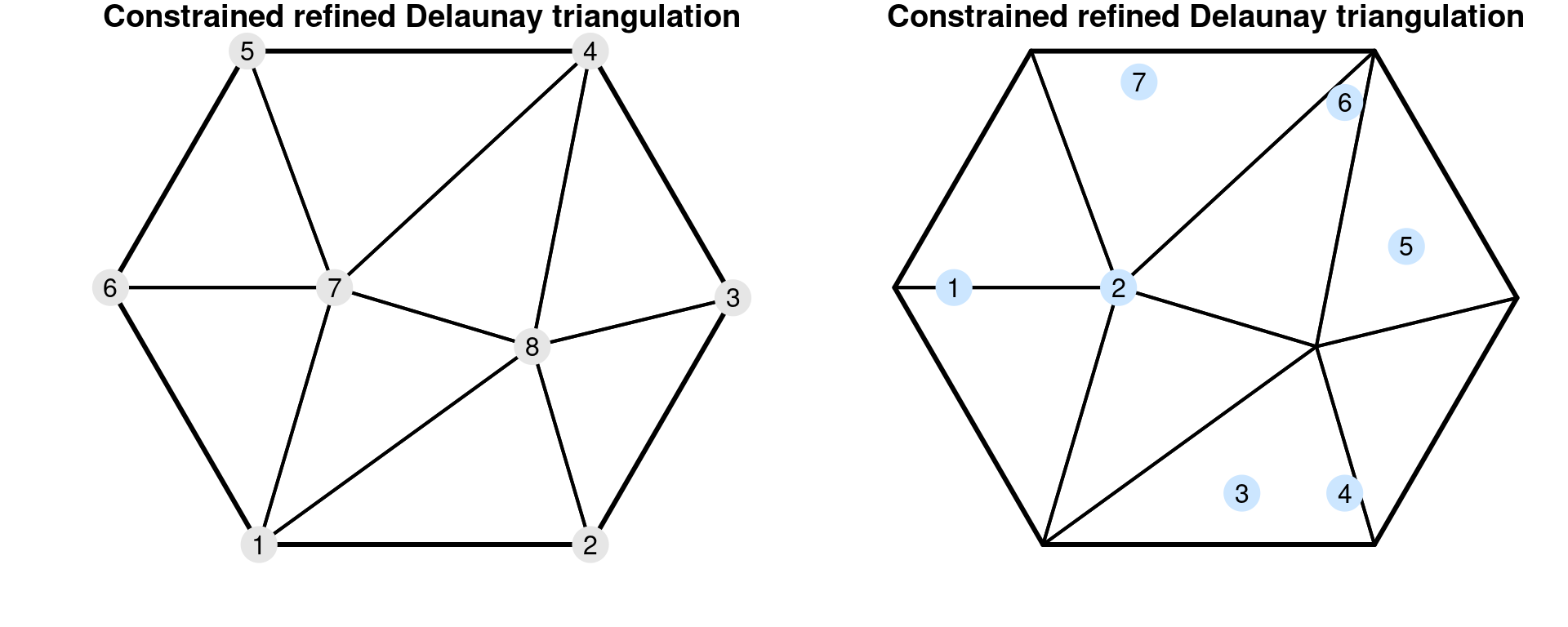

As an example, we consider a set of seven points and build a mesh around four of them with an adequate choice of the arguments in order to have a didactic illustration of the FEM. The dual mesh is also built. Finally, the code extracts matrices \(\mathbf{C}\), \(\mathbf{G}\) and \(\mathbf{A}\):

# This 's' factor will only change C, not G

s <- 3

pts <- cbind(c(0.1, 0.9, 1.5, 2, 2.3, 2, 1),

c(1, 1, 0, 0, 1.2, 1.9, 2)) * s

n <- nrow(pts)

mesh <- inla.mesh.2d(pts[-c(3, 5, 7), ], max.edge = s * 1.7,

offset = s / 4, cutoff = s / 2, n = 6)

m <- mesh$n

dmesh <- book.mesh.dual(mesh)

fem <- inla.mesh.fem(mesh, order = 1)

A <- inla.spde.make.A(mesh, pts)

Here, function book.mesh.dual() is used to create the dual mesh polygons

(see Figure 2.7). Furthermore, function inla.mesh.fem() is

used to create matrices \(\mathbf{C}\) and \(\mathbf{G}\), while function

inla.spde.make.A() creates the projector matrix \(\mathbf{A}\).

We can gain intuition about this result by considering the structure of each matrix, which is detailed in Appendix A.2 in Lindgren, Rue, and Lindström (2011). It may be easier to understand it by considering the plots in Figure 2.7. In this figure, a mesh with 8 nodes is shown in thicker border lines. The corresponding dual mesh forms a collection of polygons around each vertex of the mesh.

Figure 2.7: A mesh and its nodes numbered (top left) and the mesh with some points numbered (top right). The dual mesh polygons (mid left) and \(\mathbf{A}\) matrix (mid right). The associated \(\mathbf{C}\) matrix (bottom left) and \(\mathbf{G}\) matrix (bottom center).

The dual mesh is a set of polygons, one polygon for each vertex. Each polygon is formed by the mid of each of the edges that connects to the vertex, and the centroids of the triangles that the vertex is a corner of. Notice that for the vertices at the boundary of the mesh, this vertex is one point of the dual polygon as well. The area of each dual polygon is equal to \(\tilde{\mathbf{C}}_{ii}\).

Equivalently, \(\tilde{\mathbf{C}}_{ii}\) is equal to the sum of one third the area of each triangle that the vertex \(i\) is part of. Notice that each polygon around each mesh node is formed by one third of the triangles that it is part of. The \(\tilde{\mathbf{C}}\) matrix is diagonal.

Matrix \(\mathbf{G}\) reflects the connectivity of the mesh nodes. Nodes not connected by edges have the corresponding entry as zero. Values do not depend on the size of the triangles as they are scaled by the area of the triangles. For more detailed information, see Appendix 2 in Lindgren, Rue, and Lindström (2011).

As stated above, the resulting precision matrix for increasing \(\nu\) is a convolution of the precision matrix for \(\nu-1\) with a scaled \(\mathbf{K}_{\kappa}\). It still implies a denser precision matrix when working with \(\kappa\mathbf{C} + \mathbf{G}\).

The \(\mathbf{Q}\) precision matrix can be generalized to fractional values of \(\alpha\) (or \(\nu\)) using a Taylor approximation. See the author’s discussion response in Lindgren, Rue, and Lindström (2011). This approximation leads to a polynomial of order \(p = \lceil \alpha \rceil\) for the precision matrix:

\[\begin{equation} \tag{2.9} \mathbf{Q} = \sum_{i=0}^p b_i \mathbf{C}(\mathbf{C}^{-1}\mathbf{G})^i. \end{equation}\]

For \(\alpha=1\) and \(\alpha=2\), the precision matrix is the same as in Equation (2.8). For \(\alpha=1\), the values of the coefficients of the Taylor approximation are \(b_0=\kappa^2\) and \(b_1=1\). For the case \(\alpha=2\), the coefficients are \(b_0=\kappa^4\), \(b_1=\alpha\kappa^4\) and \(b_2=1\).

For fractional \(\alpha=1/2\), it holds that \(b_0=3\kappa/4\) and \(b_1=\kappa^{-1}3/8\). And for \(\alpha=3/2\) (and \(\nu=0.5\), the exponential case), the values are \(b_0=15\kappa^3/16\), \(b_1=15\kappa/8\) and \(b_2=15\kappa^{-1}/128\). Using these results combined with a recursive construction, for \(\alpha>2\), GMRF approximations can be obtained for all positive integers and half-integers.

2.3 A toy example

In this example we will fit a simple geostatistical model to the toy dataset

simulated in Section 2.1.4. This dataset is also available in the

INLA package and it can be loaded as follows:

data(SPDEtoy)This dataset is a three-column data.frame. Coordinates are in the first

two columns and the response in the third column.

str(SPDEtoy)

## 'data.frame': 200 obs. of 3 variables:

## $ s1: num 0.0827 0.6123 0.162 0.7526 0.851 ...

## $ s2: num 0.0564 0.9168 0.357 0.2576 0.1541 ...

## $ y : num 11.52 5.28 6.9 13.18 14.6 ...2.3.1 SPDE model definition

Given \(n\) observations \(y_i\), \(i=1,...,n\), at locations \(\mathbf{s}_i\), the following model can be defined:

\[ \begin{array}{rcl} \mathbf{y}|\beta_0,\mathbf{u},\sigma_e^2 & \sim & N(\beta_0 + \mathbf{A} \mathbf{u}, \sigma_e^2) \\ \mathbf{u} & \sim & GF(0, \Sigma) \end{array} \]

where \(\beta_0\) is the intercept, \(\mathbf{A}\) is the projector matrix and \(\mathbf{u}\) is a spatial Gaussian random field. Note that the projector matrix \(\mathbf{A}\) links the spatial Gaussian random field (defined using the mesh nodes) to the locations of the observed data.

This mesh must cover the entire spatial domain of interest. More details on

mesh building are given in Section 2.6. Here, the

fifth mesh built in Section 2.6.3 will be used. In the

following R code, a domain is first defined to create the mesh:

pl.dom <- cbind(c(0, 1, 1, 0.7, 0), c(0, 0, 0.7, 1, 1))

mesh5 <- inla.mesh.2d(loc.domain = pl.dom, max.e = c(0.092, 0.2))The SPDE model in the original parameterization can be built

using function inla.spde2.matern().

The main arguments of this function

are the mesh object (mesh) and the \(\alpha\) parameter

(alpha), which is related to the smoothness parameter of the process. The

parameterization is flexible and can be defined by the user, with a default

choice controlling the log of \(\tau\) and \(\kappa\), that jointly control the

variance and correlation range.

Instead of using the default parameterization, it is more intuitive to control

the parameters through the marginal standard deviation and the range,

\(\sqrt{8\nu}/\kappa\). For details on this parameterization see Lindgren (2012).

In addition, when defining the SPDE model a set of priors for both parameters is

also required. The inla.spde2.pcmatern() uses this parameterization to set

the Penalized Complexity prior, PC-prior, as derived in Fuglstad et al. (2018).

The Penalized Complexity prior is under the framework reviewed in Section

1.6.5.

The PC-prior was derived for the practical range, or just range,

which is the distance such that the correlation is around \(0.139\),

and the marginal standard deviation of the field, \(\sigma\).

The way of setting these priors for \(\sigma\) is that we do need to

set \(\sigma_0\) and \(p\) such that P\((\sigma>\sigma_0)=p\).

In our example we will set \(\sigma_0=10\) and \(p=0.01\) and

these values are passed to the inla.spde2.matern() function

as a vector in the next code.

For the practical range parameter the setting is that we have to

choose \(r_0\) and \(p\) such that P\((r<r_0)=p\).

We have to account for the fact that in our example the domain

is the \([0,1]\times [0,1]\) square.

We can set the PC-prior for the median by setting \(p=0.5\)

and in the next code we consider a prior median equal to \(0.3\).

The smoothness parameter \(\nu\) is fixed as \(\alpha=\nu+d/2\in [1,2]\).

Next, we build the SPDE model considering that the toy data was simulated

with \(\alpha=2\), which is actually the default value in the

inla.spde2.pcmatern() function:

spde5 <- inla.spde2.pcmatern(

# Mesh and smoothness parameter

mesh = mesh5, alpha = 2,

# P(practic.range < 0.3) = 0.5

prior.range = c(0.3, 0.5),

# P(sigma > 1) = 0.01

prior.sigma = c(10, 0.01)) 2.3.2 Projector matrix

The second step when setting an SPDE model is to build a projector matrix. The

projector matrix contains the basis function value for each basis, with one

basis function at each column. It will be used to interpolate the random field

that is being modeled at the mesh nodes. For details, see Section

2.2.2. The projector matrix can be built with the

inla.spde.make.A() function. Considering the basis function at each mesh

vertex, the basis function value for one point within one triangle is computed

as illustrated in Figure 2.6. Thus, the value for the random

field is the projection of a plane (formed by the random field value at these

three mesh points) to this point location. This is why we call a projector matrix

to the matrix that stores in each line a different basis function evaluated at

a location point. This matrix is sparse since no more than three elements in

each line are non-zero. Also, the sum of each row is equal to one.

Using the toy dataset and example mesh mesh5, the projector matrix can be

computed as follows:

coords <- as.matrix(SPDEtoy[, 1:2])

A5 <- inla.spde.make.A(mesh5, loc = coords)This matrix has dimension equal to the number of data locations times the number of vertices in the mesh:

dim(A5)

## [1] 200 490Because each point location is inside one of the triangles there are exactly three non-zero elements on each line:

table(rowSums(A5 > 0))

##

## 3

## 200Furthermore, these three elements on each line sum up to one:

table(rowSums(A5))

##

## 1

## 200The reason why they sum up to one is that each matrix element is a basis function evaluated at a point location, and the basis functions sum up to one at each location. Multiplication of this matrix by a vector representing a continuous function evaluated at the locations gives the interpolation of this function at the point locations.

There are some columns in the projector matrix all of whose elements equal zero:

table(colSums(A5) > 0)

##

## FALSE TRUE

## 242 248These columns correspond to triangles with no point location inside. These

columns can be dropped. The inla.stack() function (Section 2.3.3)

does this automatically.

When there is a mesh where every point location is at a mesh vertex, each line

on the projector matrix has exactly one non-zero element. This is the case for

the mesh1 built in Section 2.6.3:

A1 <- inla.spde.make.A(mesh1, loc = coords)In this case, all the non-zero elements in the projector matrix are equal to one:

table(as.numeric(A1))

##

## 0 1

## 579800 200Each element is equal to one in this case because the location points are actually one of the mesh nodes and thus the weight of the basis function at one mesh node is equal to one.

2.3.3 The data stack

The inla.stack() function is useful for organizing data, covariates, indices

and projector matrices, all of which are important when constructing an SPDE

model. inla.stack() helps to control the way effects are projected in the

linear predictor. Detailed examples including one dimensional, replicated

random field and space-time models are presented in Lindgren (2012) and

Lindgren and Rue (2015), which also details the mathematical theory for the stack

method.

In the toy example, there is a linear predictor that can be written as

\[ \mathbf{\eta}^{*} = \mathbf{1}\beta_0 + \mathbf{A}\mathbf{u} \;. \]

The first term on the right-hand side represents the intercept, while the second represents the spatial effect. Each term is represented as a product of a projector matrix and an effect.

The solution obtained with the Finite Element Method considered when

implementing the SPDE models builds the model on the mesh nodes. Usually, the

number of nodes is not equal to the number of locations for which we have

observations. The inla.stack() function allows us to work with predictors

that includes terms with different dimensions. The three main inla.stack()

arguments are a vector list with the data (data), a list of projector

matrices (each related to one block effect, A) and the list of effects

(effects). Optionally, a label can be assigned to the data stack (using

argument tag).

Two projector matrices are needed: the projector matrix for the latent field and a matrix that is a one-to-one map of the covariate and the response. The latter matrix can simply be a constant rather than a diagonal matrix.

The following R code will take the toy example data and use function

inla.stack() to put all these three elements (i.e., data, projector matrices

and effects) together:

stk5 <- inla.stack(

data = list(resp = SPDEtoy$y),

A = list(A5, 1),

effects = list(i = 1:spde5$n.spde,

beta0 = rep(1, nrow(SPDEtoy))),

tag = 'est')The inla.stack() function automatically eliminates any column in a projector

matrix that has a zero sum, and it generates a new and simplified matrix. The

function inla.stack.A() extracts a simplified matrix to use as an observation

predictor matrix with the inla() function, while the inla.stack.data()

function extracts the corresponding data.

The simplified projector matrix from the stack consists of the simplified projector matrices, where each column holds one effect block. Hence, its dimensions are:

dim(inla.stack.A(stk5))

## [1] 200 249In the toy example, there is one column more than the number of columns with non-zero elements in the projector matrix. This extra column is due to the intercept and all values are equal to one.

2.3.4 Model fitting and some results

To fit the model, the intercept in the formula must be removed and added as a

covariate term in the list of effects, so that all the covariate terms in the

formula can be included in a projector matrix. Then, the matrix of predictors

is passed to the inla() function in its control.predictor

argument, as follows:

res5 <- inla(resp ~ 0 + beta0 + f(i, model = spde5),

data = inla.stack.data(stk5),

control.predictor = list(A = inla.stack.A(stk5)))The inla() function returns an object that is a set of several results. It

includes summaries, marginal posterior densities of each parameter in the

model, the regression parameters, each element that is a latent field, and all

the hyperparameters.

The summary of the intercept \(\beta_0\) is obtained with the following R code:

res5$summary.fixed

## mean sd 0.025quant 0.5quant 0.975quant mode

## beta0 9.525 0.6932 8.073 9.539 10.89 9.559

## kld

## beta0 6.141e-10Similarly, the summary of the precision of the Gaussian likelihood, i.e., \(1/\sigma_e^2\), can be obtained as follows:

res5$summary.hyperpar[1, ]

## mean sd

## Precision for the Gaussian observations 2.744 0.4268

## 0.025quant 0.5quant

## Precision for the Gaussian observations 1.997 2.713

## 0.975quant mode

## Precision for the Gaussian observations 3.673 2.654A marginal distribution in the inla() output consists of two vectors. One is

a set of values on the range of the parameter space with posterior marginal

density bigger than zero and another is the posterior marginal density at each

one of these values. Any posterior marginal can be transformed. For example,

if the posterior marginal for \(\sigma_e\) is required, the square root of \(\sigma_e^2\),

it can be obtained as follows:

post.se <- inla.tmarginal(function(x) sqrt(1 / exp(x)),

res5$internal.marginals.hyperpar[[1]])Now, it is possible to obtain summary statistics from this marginal distribution:

inla.emarginal(function(x) x, post.se)

## [1] 0.6091

inla.qmarginal(c(0.025, 0.5, 0.975), post.se)

## [1] 0.5224 0.6070 0.7067

inla.hpdmarginal(0.95, post.se)

## low high

## level:0.95 0.5189 0.7026

inla.pmarginal(c(0.5, 0.7), post.se)

## [1] 0.005309 0.966850In the previous code, function inla.emarginal() is used to compute the

posterior expectation of a function on the parameter, function

inla.qmarginal() computes quantiles from the posterior marginal, function

inla.hpdmarginal() computes a highest posterior density (HPD) interval and

function inla.pmarginal() can be used to obtain posterior probabilities.

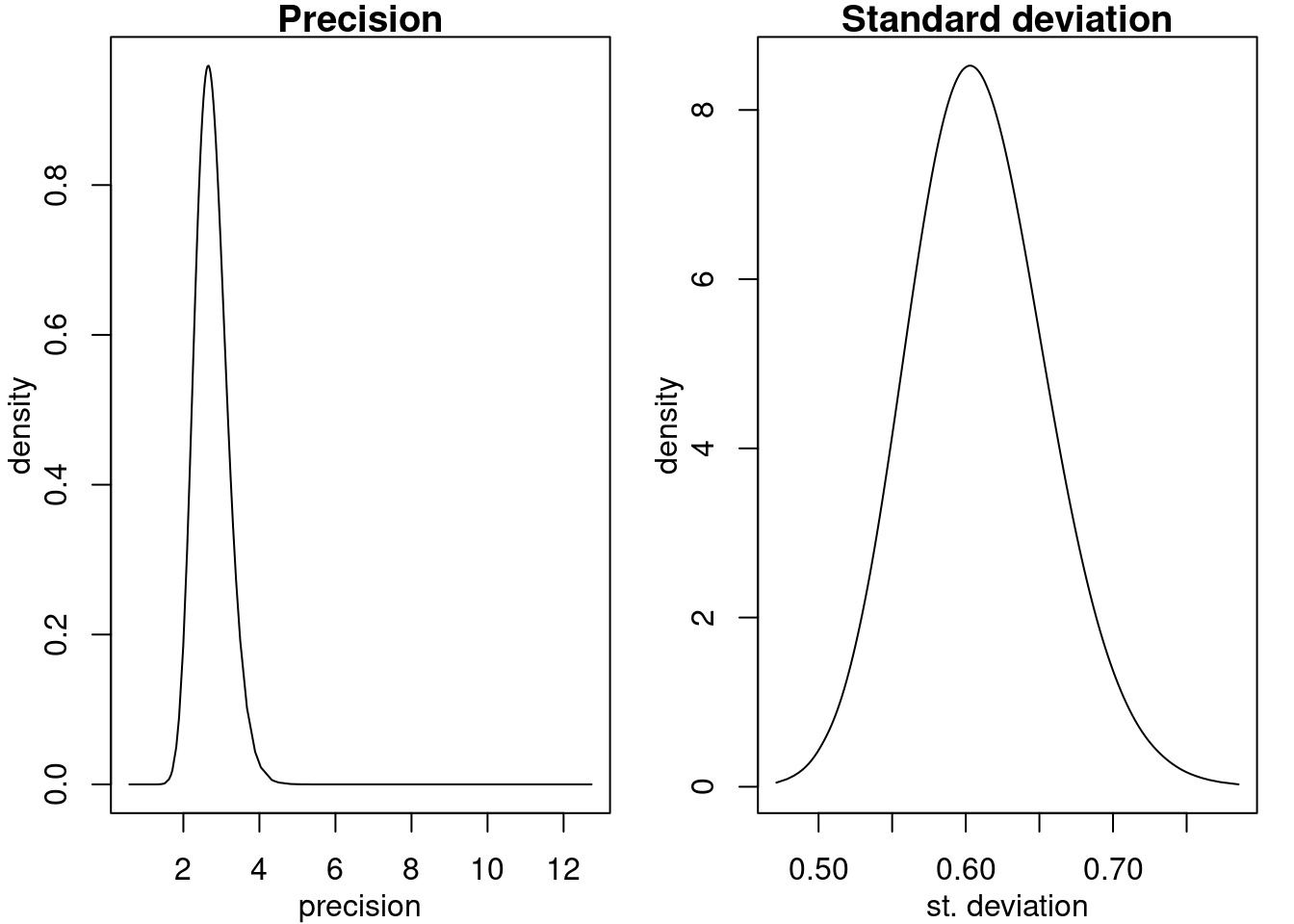

Figure 2.8 shows the posterior marginals of the precision and the

standard deviation, which has been created with the following code:

par(mfrow = c(1, 2), mar = c(3, 3, 1, 1), mgp = c(2, 1, 0))

plot(res5$marginals.hyperpar[[1]], type = "l", xlab = "precision",

ylab = "density", main = "Precision")

plot(post.se, type = "l", xlab = "st. deviation",

ylab = "density", main = "Standard deviation")

Figure 2.8: Posterior marginals of the precision (top) and the standard deviation (bottom).

In cases with weakly identifiable parameters, transforming the posterior marginal densities leads to a loss of accuracy. To reduce that loss, one can operate more directly on the marginal densities without first transforming:

post.orig <- res5$marginals.hyperpar[[1]]

fun <- function(x) rev(sqrt(1 / x)) # Use rev() to preserve order

ifun <- function(x) rev(1 / x^2)

inla.emarginal(fun, post.orig)

## [1] 0.5466

fun(inla.qmarginal(c(0.025, 0.5, 0.975), post.orig))

## [1] 0.5223 0.6072 0.7074

fun(inla.hpdmarginal(0.95, post.orig))

## [1] 0.5274 0.7169

inla.pmarginal(ifun(c(0.5, 0.7)), post.orig)

## [1] 0.03403 0.99427Note that the HPD interval is not invariant to changes of variables, and if a specific interpretation is required one therefore has to start by transforming the density to that parameterization.

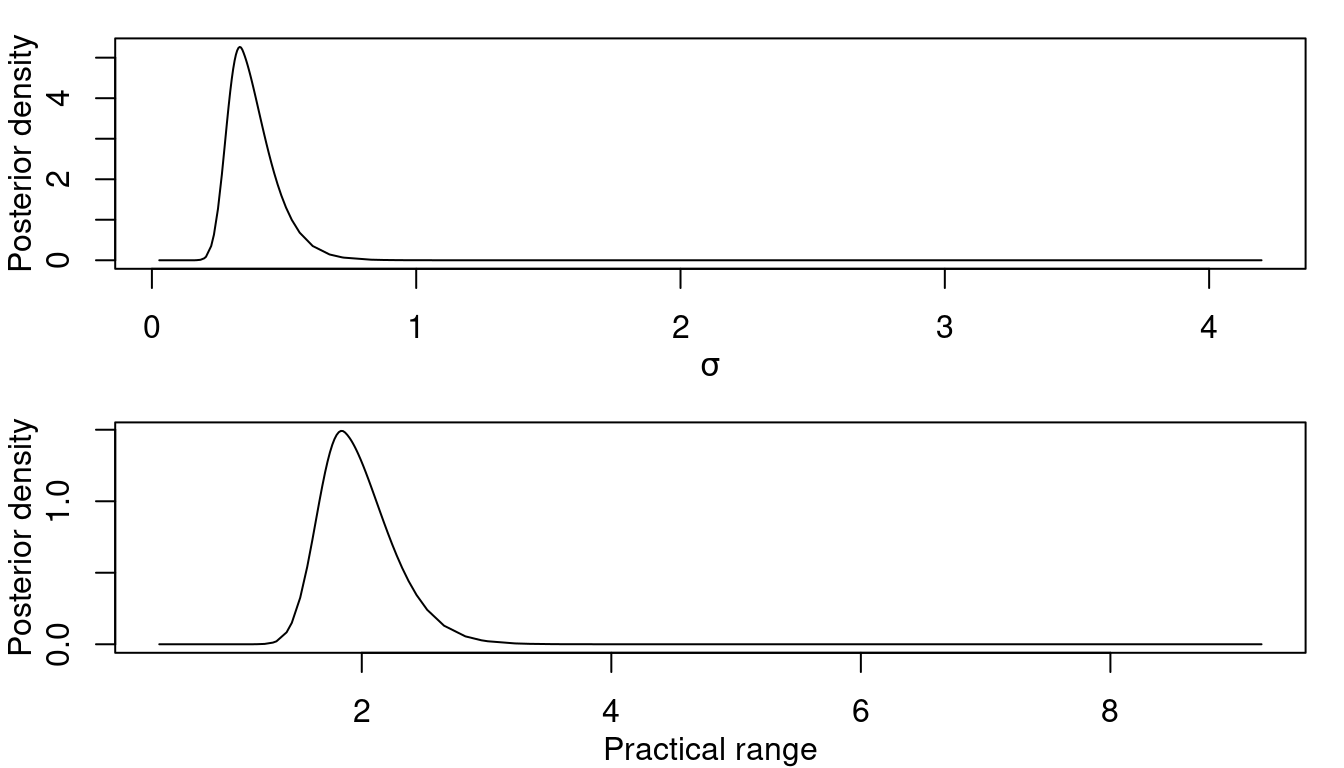

The posterior marginals for the parameters of the latent field are visualized in Figure 2.9 with:

par(mfrow = c(2, 1), mar = c(3, 3, 1, 1), mgp = c(2, 1, 0))

plot(res5$marginals.hyperpar[[2]], type = "l",

xlab = expression(sigma), ylab = 'Posterior density')

plot(res5$marginals.hyperpar[[3]], type = "l",

xlab = 'Practical range', ylab = 'Posterior density')

Figure 2.9: Posterior marginal distribution for \(\sigma\) (left) and the practical range (right).

Furthermore, summary statistics and HPD intervals can also be computed, and these marginals can be plotted as well to visualize them.

2.4 Projection of the random field

A common objective when dealing with spatial data collected at some locations is the prediction on a fine grid of the spatial model to get high resolution maps. In this section we show how to do this prediction for the random field term only. In Section 2.5, prediction of the outcome will be described. In this example, the random field will be predicted at three target locations: (0.1, 0.1), (0.5, 0.55), (0.7, 0.9). These points are defined in the following code:

pts3 <- rbind(c(0.1, 0.1), c(0.5, 0.55), c(0.7, 0.9))The predictor matrix for the target locations is:

A5pts3 <- inla.spde.make.A(mesh5, loc = pts3)

dim(A5pts3)

## [1] 3 490In order to visualize only the columns with non-zero elements of this matrix, the following code can be run:

jj3 <- which(colSums(A5pts3) > 0)

A5pts3[, jj3]

## 3 x 9 sparse Matrix of class "dgCMatrix"

##

## [1,] . . 0.094 . . . 0.51 0.39 .

## [2,] 0.22 . . 0.32 . 0.46 . . .

## [3,] . 0.12 . . 0.27 . . . 0.61This projector matrix can then be used for interpolating a functional of the random field, for example, the posterior mean. This interpolation is a computationally very cheap operation, since it is a matrix vector product where the matrix is sparse, having only up to three non-zero elements in each row.

drop(A5pts3 %*% res5$summary.random$i$mean)

## [1] 0.1489 3.0358 -2.76862.4.1 Projection on a grid

By default, the inla.mesh.projector() function computes a projector matrix

automatically for a grid of points over a square that contains the mesh. It

can be used to get the map of the random field on a fine grid. To get a

projector matrix on a grid in the domain \([0, 1]\times [0, 1]\) these limits can

be passed to the inla.mesh.projector() function:

pgrid0 <- inla.mesh.projector(mesh5, xlim = 0:1, ylim = 0:1,

dims = c(101, 101))Then, the projection of the posterior mean and the posterior standard deviation can be obtained with the following code:

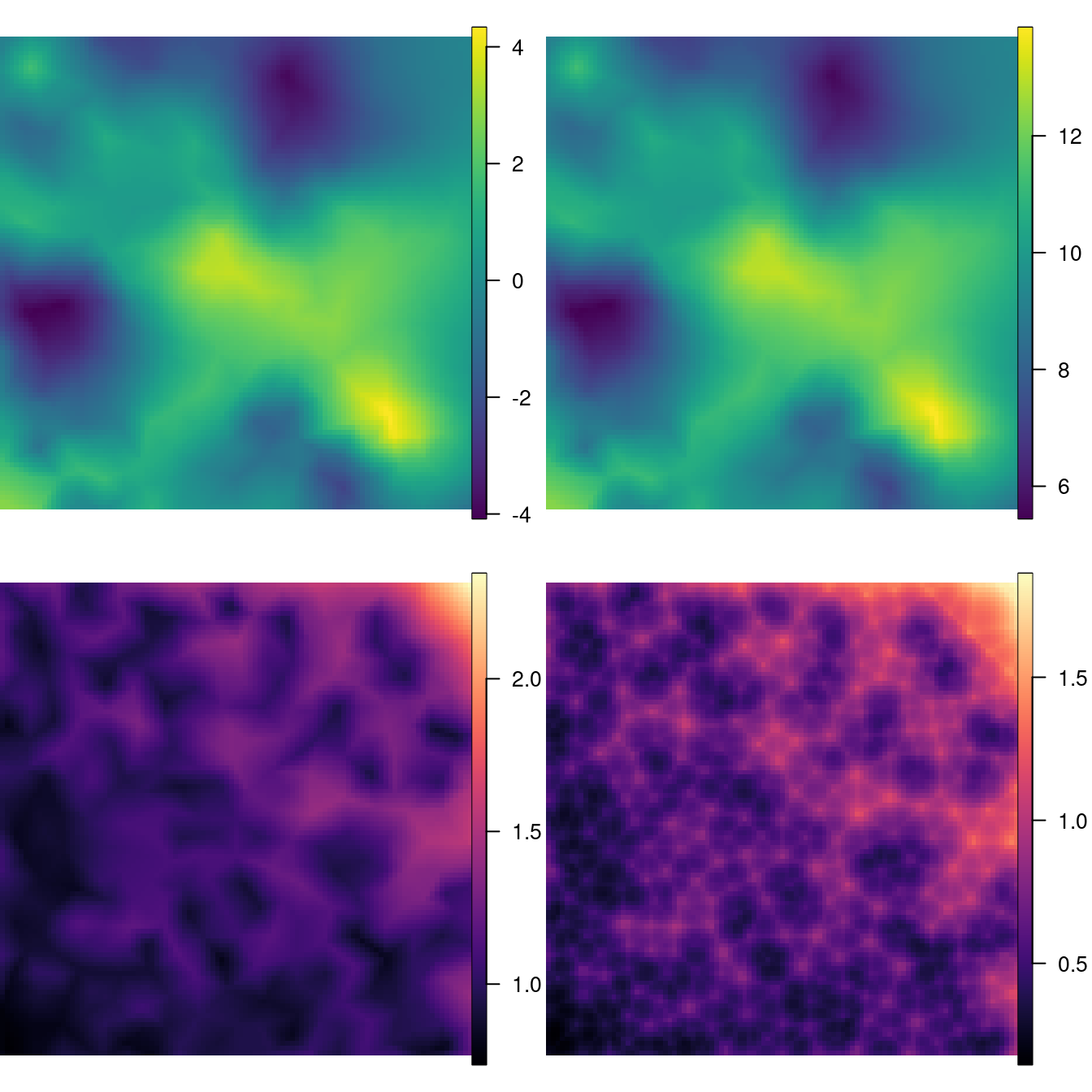

prd0.m <- inla.mesh.project(pgrid0, res5$summary.random$i$mean)

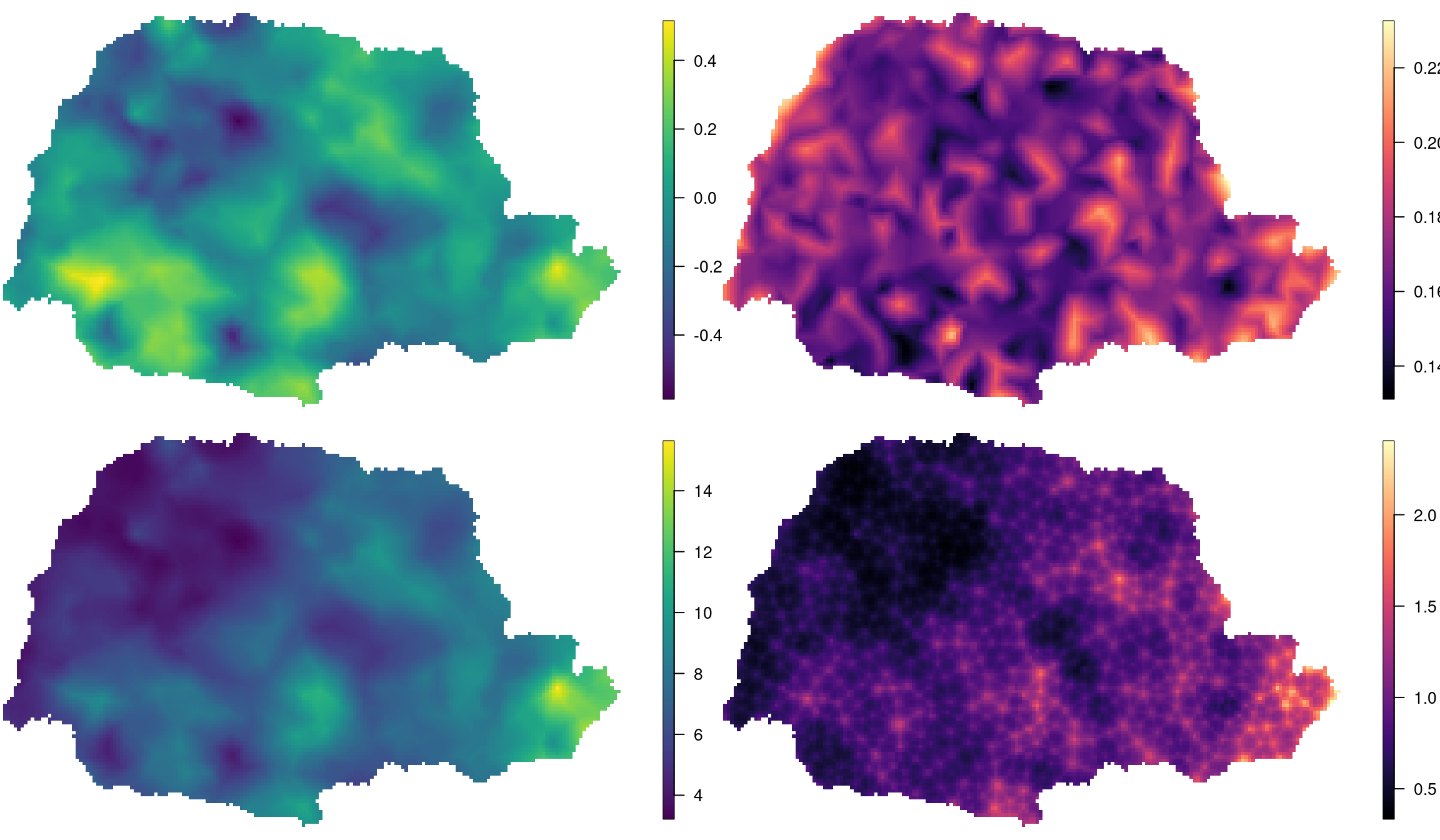

prd0.s <- inla.mesh.project(pgrid0, res5$summary.random$i$sd)The values projected on the grid are available in Figure 2.10.

2.5 Prediction

Another quantity of interest when modeling spatially continuous processes is the prediction of the expected value on a target location for which data have not been observed. In a similar way as in the previous section, it is possible to compute the marginal distribution of the expected value at the target location or to make a projection of some functional of it, specifically, considering that

\[ \mathbf{y} \sim N(\mathbf{\mu} = \mathbf{1}\beta_0 + \mathbf{A} \mathbf{u}, \sigma_e^2\mathbf{I}). \]

We will be computing the posterior distribution of \(\mathbf{\mu}\). This problem is often called prediction.

2.5.1 Joint estimation and prediction

In this case, we just set a scenario for the prediction and include it in the stack used in the model fitting. This is similar to the stack created to predict the random field, but here all the fixed effects have to be considered in the predictor and effects slots of the stack. In our case we just have the intercept:

stk5.pmu <- inla.stack(

data = list(resp = NA),

A = list(A5pts3, 1),

effects = list(i = 1:spde5$n.spde, beta0 = rep(1, 3)),

tag = 'prd5.mu')This stack is then joined to the data stack to fit the model again. Notice we

can save time by considering the previous fitted model parameters here (by

using parameter control.mode):

stk5.full <- inla.stack(stk5, stk5.pmu)

r5pmu <- inla(resp ~ 0 + beta0 + f(i, model = spde5),

data = inla.stack.data(stk5.full),

control.mode = list(theta = res5$mode$theta, restart = FALSE),

control.predictor = list(A = inla.stack.A(

stk5.full), compute = TRUE))The fitted values in an inla object are summarized in a single data.frame

for all the observations in the dataset. In order to find the predicted values

for the values with missing observations, the index to their rows in the

data.frame must be found first. This index can be obtained from the full

stack by indicating the tag in the corresponding stack, as follows:

indd3r <- inla.stack.index(stk5.full, 'prd5.mu')$data

indd3r

## [1] 201 202 203To get the summary of the posterior distributions of \(\mu\)

at the target location’s the index must be passed to the data.frame

with the summary statistics:

r5pmu$summary.fitted.values[indd3r, c(1:3, 5)]

## mean sd 0.025quant 0.975quant

## fitted.APredictor.201 9.674 0.3442 9.001 10.351

## fitted.APredictor.202 12.561 0.6308 11.325 13.804

## fitted.APredictor.203 6.755 0.9970 4.804 8.726Also, the posterior marginal distribution of the predicted values can be obtained:

marg3r <- r5pmu$marginals.fitted.values[indd3r]Finally, a 95% HPD interval for \(\mu\) at the second target location can

be computed with the function inla.hpdmarginal():

inla.hpdmarginal(0.95, marg3r[[2]])

## low high

## level:0.95 11.32 13.8It is possible to see that around point (0.5, 0.5) the values of the response are significantly larger than \(\beta_0\), as seen in Figure 2.10.

2.5.2 Summing the linear predictor components

A computationally cheap approach is to (naïvely) sum the projected posterior means of the terms in the linear regression. For covariates, this is done by considering the posterior means of the covariate coefficients and multiplying them by a covariate scenario, as done in Cameletti et al. (2013). In this toy example, we have only to consider the posterior mean of the intercept and the posterior mean of the random field:

res5$summary.fix[1, "mean"] +

drop(A5pts3 %*% res5$summary.random$i$mean)

## [1] 9.674 12.561 6.756For the standard error, a similar approach is possible. However, it needs to account for the intercept variance and covariance of the two terms in the sum as well.

2.5.3 Prediction on a grid

The computation of all marginal posterior distributions at the points of a grid is computationally expensive. However, complete marginal distributions are seldom used although they are computed by default. Instead, posterior means and standard deviations are enough in most of the cases. As we do not need the entire posterior marginal distribution at each point in the grid, we set an option in the call to stop returning it in the output. This is useful for saving memory when we want to store the object for use in the future.

In the code below, the model is fitted again, considering the mode for all the

hyperparameters in the model fitted previously, but the storage of the marginal

posterior distributions of random effects and posterior predictor values is

disabled (using parameter control.results). The computation of the quantiles

is also disabled, by setting quantiles equal to FALSE. Only the mean and

standard deviation are stored. Furthermore, the projector matrix is the same

that was used in the previous example to project the posterior mean on the

grid. Thus, we will have the mean and standard deviation of the posterior

marginal distribution of \(\mu\) at each point in the grid.

stkgrid <- inla.stack(

data = list(resp = NA),

A = list(pgrid0$proj$A, 1),

effects = list(i = 1:spde5$n.spde, beta0 = rep(1, 101 * 101)),

tag = 'prd.gr')

stk.all <- inla.stack(stk5, stkgrid)

res5g <- inla(resp ~ 0 + beta0 + f(i, model = spde5),

data = inla.stack.data(stk.all),

control.predictor = list(A = inla.stack.A(stk.all),

compute = TRUE),

control.mode=list(theta = res5$mode$theta, restart = FALSE),

quantiles = NULL,

control.results = list(return.marginals.random = FALSE,

return.marginals.predictor = FALSE))Again, the index of the predicted values needs to be obtained:

igr <- inla.stack.index(stk.all, 'prd.gr')$dataThis index can be used to visualize or summarize the predicted values. These predicted values have been plotted together with the prediction of the random field obtained in Section 2.4.1 in Figure 2.10.

Figure 2.10: The mean and standard deviation of the random field (top left and bottom left, respectively) and the mean and standard deviation of the fitted values (top right and bottom right, respectively).

Figure 2.10 shows that there is a variation from -4 to 4 in the spatial effect. Considering also that standard deviations range from about 0.8 to 1.6, spatial dependence is considerable. In addition, the standard deviation of both the random field and \(\mu\) are smaller near corner (0, 0) and larger near corner (1, 1). This is just proportional to the locations density.

The two standard deviation fields are different because one is for the random field only and the other is for the expected value of the outcome, which takes into account the standard deviation of the mean as well.

2.5.4 Results from different meshes

In this section, results for the toy dataset based on the six different meshes

built in Section 2.6 will be compared. To do this comparison, the

posterior marginal distributions of the model parameters will be plotted. The

true values used in the simulation of the toy dataset have been added to the

plots in order to evaluate the impact of the meshes on the results. Also,

maximum likelihood estimates using the geoR package (Ribeiro Jr. and Diggle 2001) have been added.

Six models will be fitted, using each one of the six meshes, and the results are put together in a list using the following code:

lrf <- list()

lres <- list()

l.dat <- list()

l.spde <- list()

l.a <- list()

for (k in 1:6) {

# Create A matrix

l.a[[k]] <- inla.spde.make.A(get(paste0('mesh', k)),

loc = coords)

# Creeate SPDE spatial effect

l.spde[[k]] <- inla.spde2.pcmatern(get(paste0('mesh', k)),

alpha = 2, prior.range = c(0.1, 0.01),

prior.sigma = c(10, 0.01))

# Create list with data

l.dat[[k]] <- list(y = SPDEtoy[,3], i = 1:ncol(l.a[[k]]),

m = rep(1, ncol(l.a[[k]])))

# Fit model

lres[[k]] <- inla(y ~ 0 + m + f(i, model = l.spde[[k]]),

data = l.dat[[k]], control.predictor = list(A = l.a[[k]]))

}Mesh size influences the computational time needed to fit the model. More

nodes in the mesh need more computational time. The times required to fit the

models with INLA for these six meshes are shown in Table

2.1.

| Mesh | Size | Time |

|---|---|---|

| 1 | 2900 | 74.8375 |

| 2 | 488 | 1.3091 |

| 3 | 371 | 0.8305 |

| 4 | 2878 | 43.8911 |

| 5 | 490 | 1.5561 |

| 6 | 375 | 1.1386 |

Furthermore, the posterior marginal distribution of \(\sigma_e^2\) is computed for each fitted model:

s2.marg <- lapply(lres, function(m) {

inla.tmarginal(function(x) exp(-x),

m$internal.marginals.hyperpar[[1]])

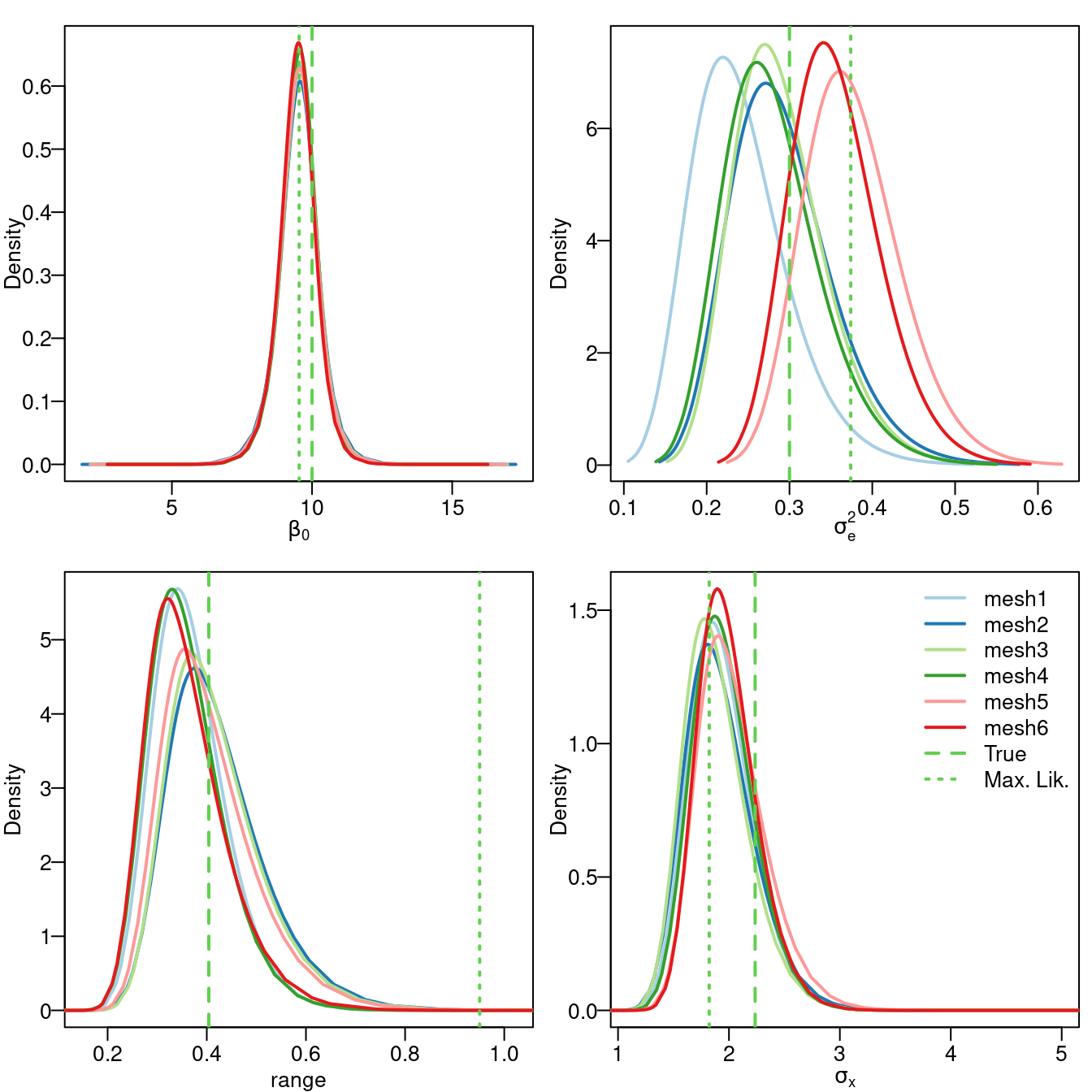

})The true values of the model parameters are: \(\beta_0=10\), \(\sigma_e^2=0.3\), \(\sigma_x^2=5\), range \(\sqrt{8}/7\) and \(\nu=1\). The \(\nu\) parameter is fixed at the true value as a result of setting \(\alpha=2\) in the definition of the SPDE model.

beta0 <- 10

sigma2e <- 0.3

sigma2u <- 5

range <- sqrt(8) / 7

nu <- 1The maximum likelihood estimates obtained with package geoR (Ribeiro Jr. and Diggle 2001) are:

lk.est <- c(beta = 9.54, s2e = 0.374, s2u = 3.32,

range = 0.336 * sqrt(8))The posterior marginal distributions of \(\beta_0\), \(\sigma_e^2\), \(\sigma_x^2\), and range have been plotted in Figure 2.11. Here, it can be seen how the posterior marginal distribution of the intercept has its mode at the likelihood estimate, considering the results from all six meshes.

The main differences can be found in the noise variance \(\sigma_e^2\) (i.e., the nugget effect). The results from the mesh based on the points have a posterior mode which is smaller than the actual value and the maximum likelihood estimate. In general, as the number of triangles in the mesh increase, the posterior mode gets closer to the actual value of the parameter. Regarding the meshes computed from the boundary, they seem to provide posterior modes which are closer to the maximum likelihood estimate than the other meshes.

Regarding the marginal variance of the latent field, \(\sigma_x^2\), all meshes produced results in a posterior smaller than the actual value and closer to the maximum likelihood estimate. For the range, all meshes have a mode smaller than the maximum likelihood estimate and very close to the actual value.

Figure 2.11: Marginal posterior distribution for \(\beta_0\) (top left), \(\sigma_e^2\) (top right), range (bottom left) and \(\sqrt{\sigma_x^2}\) (bottom right).

Although these results are based on a toy example and are not conclusive, a general comment is that it is good to have a mesh tuned about the point locations, to capture noise variance. It is also important to allow for some flexibility to avoid a large variability in the shape and size of the triangles, to get good estimates of the latent field parameters. Thus, it is a tradeoff between (1) point locations and (2) large variability in the shape of the mesh triangles.

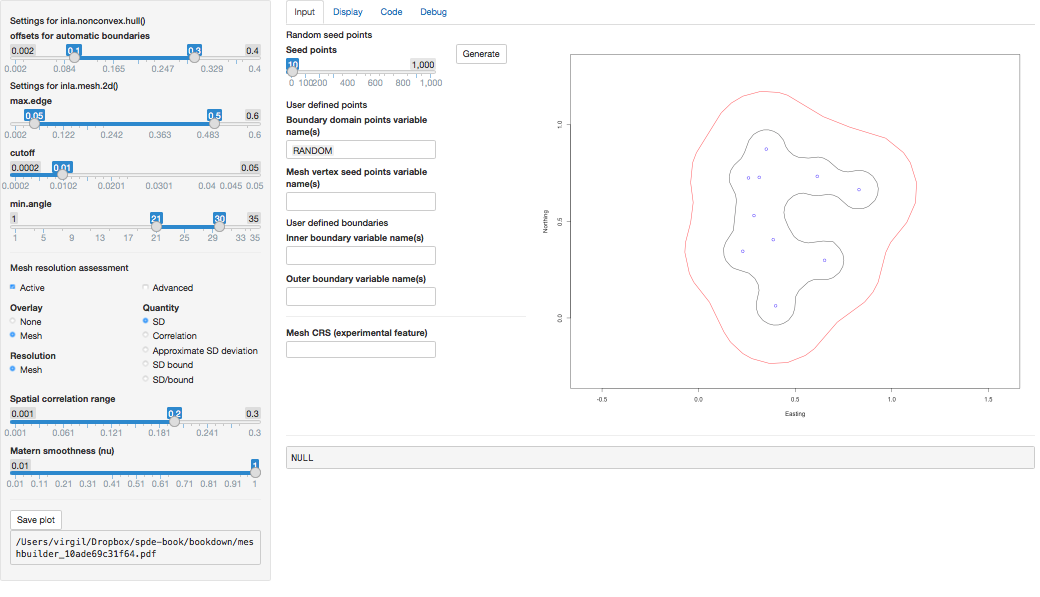

2.6 Triangulation details and examples

As stated earlier in this chapter, the first step to fit an SPDE model is the construction of the mesh to represent the spatial process. This step must be done very carefully, since it is similar to choosing the integration points on a numeric integration algorithm. Should the points be taken at regular intervals? How many points are needed? Furthermore, additional points around the boundary, or outer extension, need to be chosen with care. This is necessary to avoid a boundary effect that may cause a variance twice as larger at the border than within the domain (Lindgren 2012; Lindgren and Rue 2015).

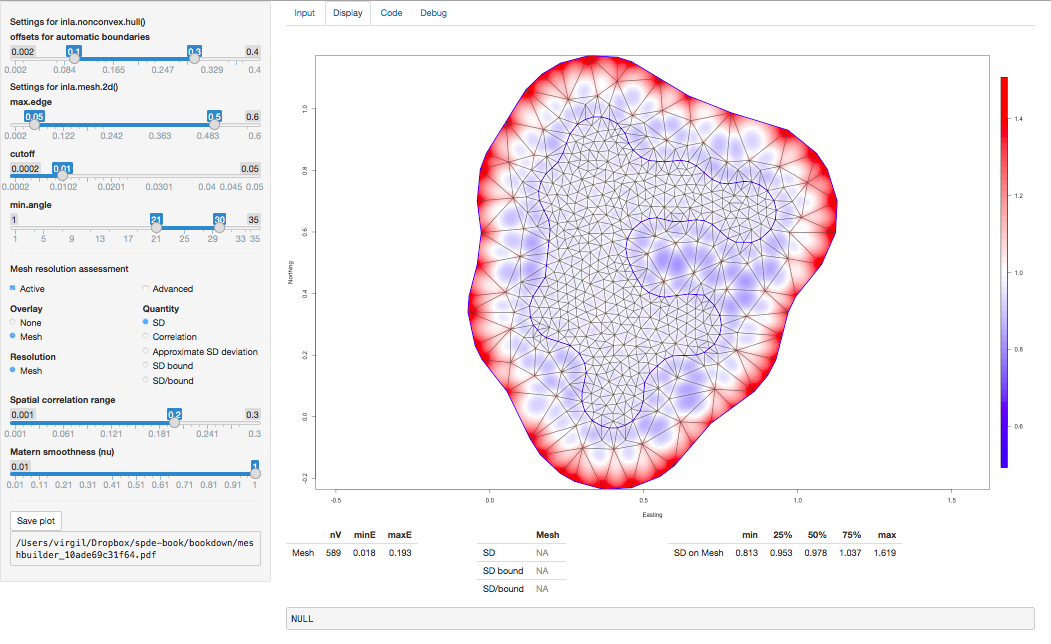

Finally, in order to experiment with mesh building, there is a Shiny

application in the INLA package that can be loaded with meshbuilder().

This will be useful to learn and understand how the different parameters

are used in the definition of the mesh work. This is further described in

Section 2.7.

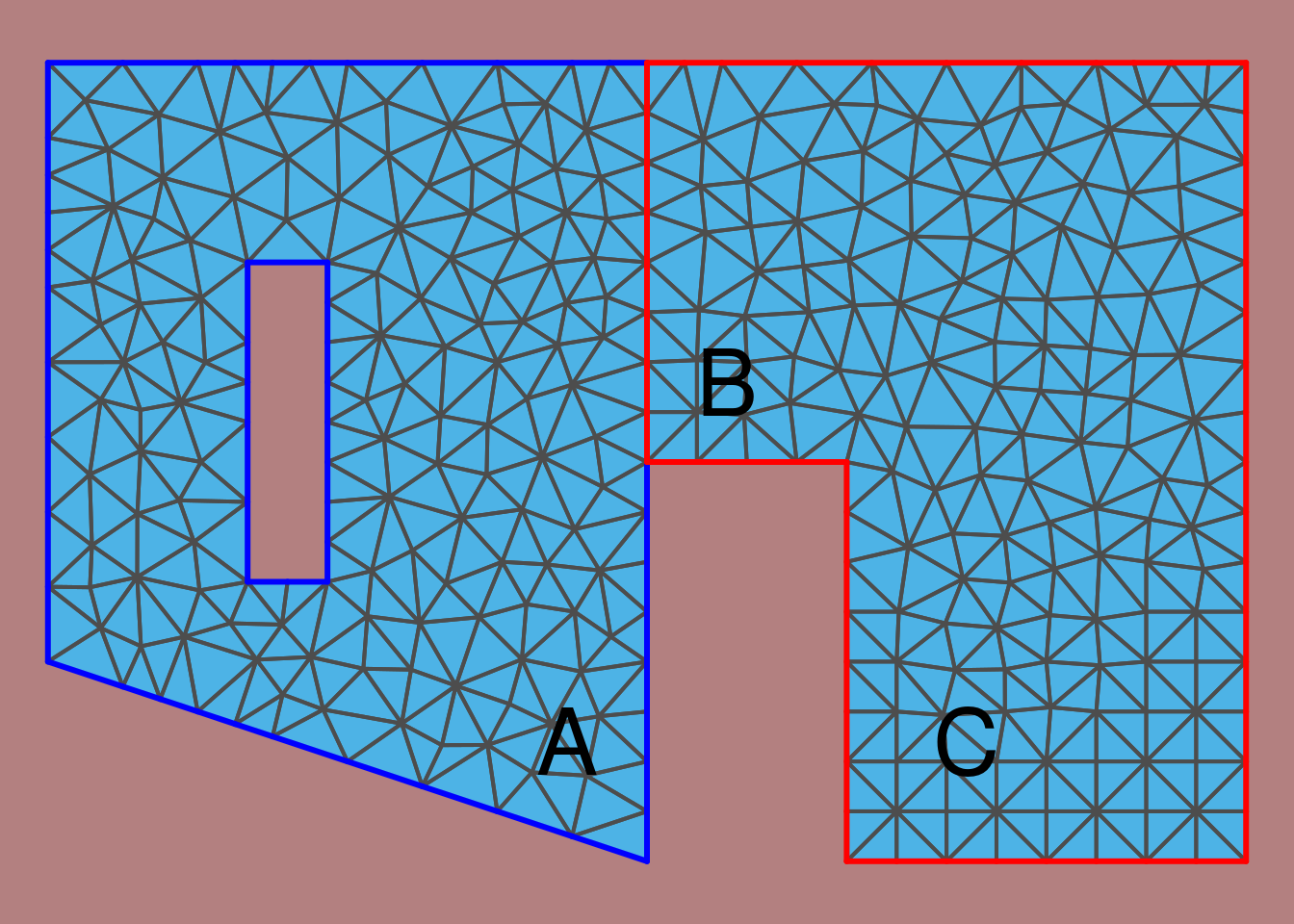

2.6.1 Getting started

For a two dimensional mesh, function inla.mesh.2d() is the one that is

recommended to use for building a mesh. This function creates a Constrained

Refined Delaunay Triangulation (CRDT) over the study region, that will be simply

referred to as the mesh. This function can take several arguments:

str(args(inla.mesh.2d))

## function (loc = NULL, loc.domain = NULL, offset = NULL,

## n = NULL, boundary = NULL, interior = NULL,

## max.edge = NULL, min.angle = NULL, cutoff = 1e-12,

## max.n.strict = NULL, max.n = NULL, plot.delay = NULL,

## crs = NULL)First of all, some information about the study region is needed to create the

mesh. This can be provided by the location points or just a domain. The point

locations, which can be passed to the function in the loc argument, are used

as initial triangulation nodes. A single polygon can be supplied to determine

the domain extent using the loc.domain argument. After the location

points or the boundary have been passed to the function, the algorithm will

find a convex hull mesh. A non-convex hull mesh can be made when a

list of polygons is passed using the boundary argument, where each element of

this list is of the class returned by function inla.mesh.segment(). Hence,

one of these three arguments (loc, domain or boundary) is mandatory.

The other mandatory argument is max.edge. This argument specifies the maximum

allowed triangle edge length in the inner domain and in the outer extension.

So, it can be a single numeric value or length two vector, and it must be in

the same scale units as the coordinates.

The other arguments are used to specify additional constraints on the mesh. The

offset argument is a numeric value (or a length two vector) and it is used to

set the automatic extension distance. If positive, it is the extension

distance in the same scale units. If

negative, it is interpreted as a factor relative to the approximate data

diameter; i.e., a value of -0.10 (the default) will add a 10% of the data

diameter as outer extension.

Argument n is the initial number of points in the extended boundary. The

interior argument is a list of segments to specify interior constraints, each

one of the inla.mesh.segment class. A good mesh needs to have triangles as

regular as possible in size and shape. This can be controlled with argument

max.edge to control the edge length of the triangles and the min.angle

argument (which can be scalar or length two vector) can be used to specify the

minimum internal angles of the triangles in the inner domain and the outer

extension. Values up to 21 guarantee the convergence of the algorithm

(de Berg et al. 2008; Guibas, Knuth, and Sharir 1992).

To further control the shape of the triangles, the cutoff argument can be

used to set the minimum allowed distance between points. It means that points

at a closer distance than the supplied value are replaced by a single vertex.

Hence, it avoids small triangles and must be a positive number, and is critical

when there are some points very close to each other, either for point locations

or in the domain boundary.

To understand how function inla.mesh.2d() works, several meshes have been

computed for different combinations of some of the arguments using the first

five locations of the toy dataset. First, the toy dataset will be loaded and

its first five points taken:

data(SPDEtoy)

coords <- as.matrix(SPDEtoy[, 1:2])

p5 <- coords[1:5, ]As the meshes will be built using the domain and not the points, this domain needs to be defined first:

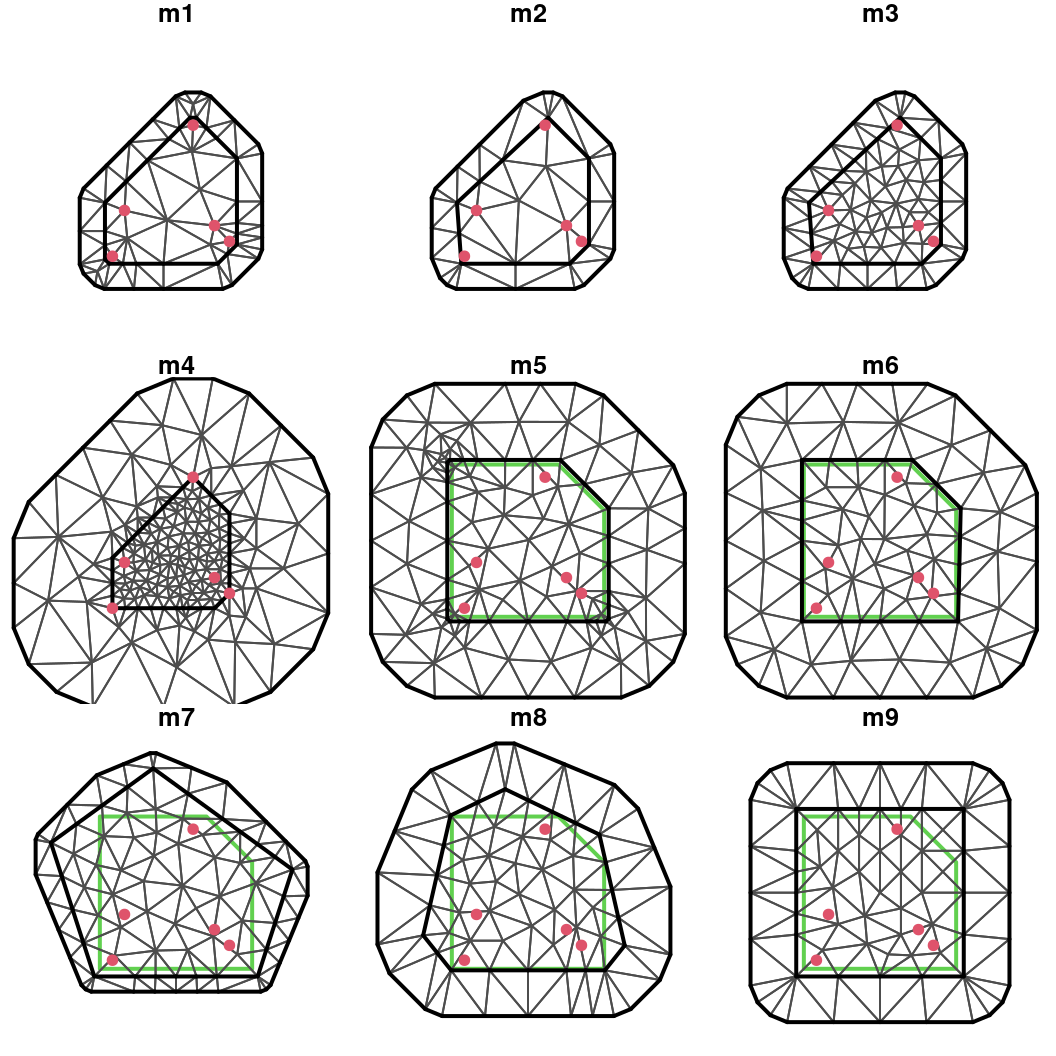

pl.dom <- cbind(c(0, 1, 1, 0.7, 0, 0), c(0, 0, 0.7, 1, 1, 0))This domain has been plotted in green over some of the meshes in Figure 2.12.

Finally, the meshes are created using the first five points or the domain defined above:

m1 <- inla.mesh.2d(p5, max.edge = c(0.5, 0.5))

m2 <- inla.mesh.2d(p5, max.edge = c(0.5, 0.5), cutoff = 0.1)

m3 <- inla.mesh.2d(p5, max.edge = c(0.1, 0.5), cutoff = 0.1)

m4 <- inla.mesh.2d(p5, max.edge = c(0.1, 0.5),

offset = c(0, -0.65))

m5 <- inla.mesh.2d(loc.domain = pl.dom, max.edge = c(0.3, 0.5),

offset = c(0.03, 0.5))

m6 <- inla.mesh.2d(loc.domain = pl.dom, max.edge = c(0.3, 0.5),

offset = c(0.03, 0.5), cutoff = 0.1)

m7 <- inla.mesh.2d(loc.domain = pl.dom, max.edge = c(0.3, 0.5),

n = 5, offset = c(0.05, 0.1))

m8 <- inla.mesh.2d(loc.domain = pl.dom, max.edge = c(0.3, 0.5),

n = 7, offset = c(0.01, 0.3))

m9 <- inla.mesh.2d(loc.domain = pl.dom, max.edge = c(0.3, 0.5),

n = 4, offset = c(0.05, 0.3))

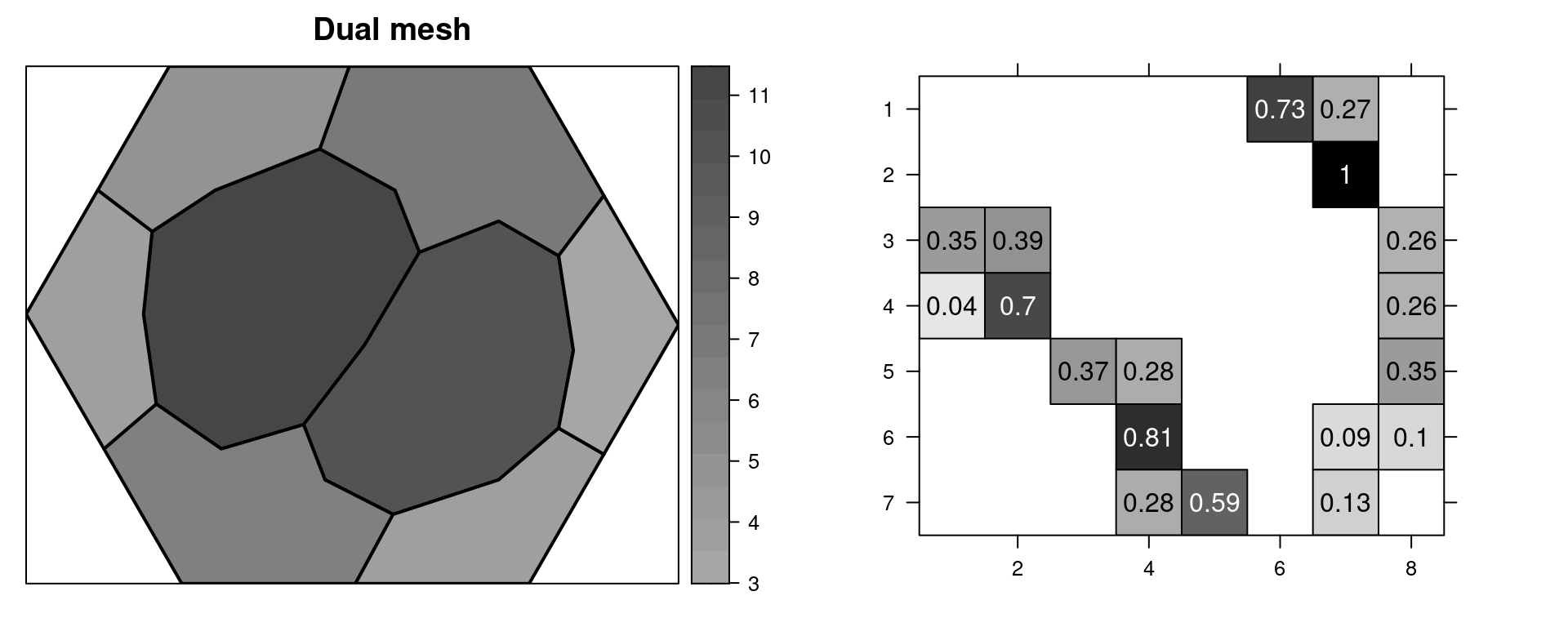

Figure 2.12: Different triangulations created with function inla.mesh.2d() using different combinations of its arguments.

These nine meshes have been displayed in Figure 2.12. In the next paragraphs we provide a comparative critical assessment of the quality of the different meshes depending on their structure.

The m1 mesh has two main problems. First, there are some triangles with

small inner angles and, second, some large triangles appear in the inner

domain. In the m2 mesh, the restriction on the locations is relaxed, because

points with distance less than the cutoff are considered a single vertex. This

avoids some of the triangles (at the bottom right corner) with small angles

that appeared in mesh m1. Hence, using a cutoff is a very good idea. Each

inner triangle in the m3 mesh has an edge length less than 0.1 and this mesh

looks better than the two previous ones.

The m4 mesh has been made without first building a convex hull extension

around the points. It has just the second outer boundary. In this case, the

length of the inner triangles is not good (first value in the max.edge

argument) and there are triangles with edge lengths of up to 0.5 in the outer

region. The shape of the triangles looks good in general.

The m5 mesh was made just using the domain polygon and it has a shape similar

to the domain area. In this mesh there are some small triangles at the corners

of the domain due to the fact that it has been built without specifying a

cutoff. Also, there is a (relatively) small first extension and a

(relatively) large second one. In the m6 mesh, a cutoff has been added and a

better mesh than the previous one has been obtained.

In the last three meshes, the initial number of extension points has been

changed. It can be useful to change this value in some situations to get a

convergence of the mesh building algorithm. For example, Figure

2.12 shows the shape of the mesh obtained with n = 5 in the m7 mesh. This number produces a mesh that seems inadequate for

this domain because there is a non uniform extension behind the border.

Meshes m8 and m9 have very bad triangle shapes.

The object returned by the inla.mesh.2d() function is of class inla.mesh

and it is a list with several elements:

class(m1)

## [1] "inla.mesh"

names(m1)

## [1] "meta" "manifold" "n" "loc" "graph"

## [6] "segm" "idx" "crs"The number of vertices in each mesh is in the n element of this list, which

allows us to compare the number of vertices of all the meshes created:

c(m1$n, m2$n, m3$n, m4$n, m5$n, m6$n, m7$n, m8$n, m9$n)

## [1] 61 36 79 195 117 88 87 69 72The graph element represents the CRDT obtained. In addition, the graph

element contains the matrix that represents the graph of the neighborhood

structure. For example, for mesh m1 this matrix has the following dimension:

dim(m1$graph$vv)

## [1] 61 61The vertices that correspond to the location points are identified in the idx

element:

m1$idx$loc

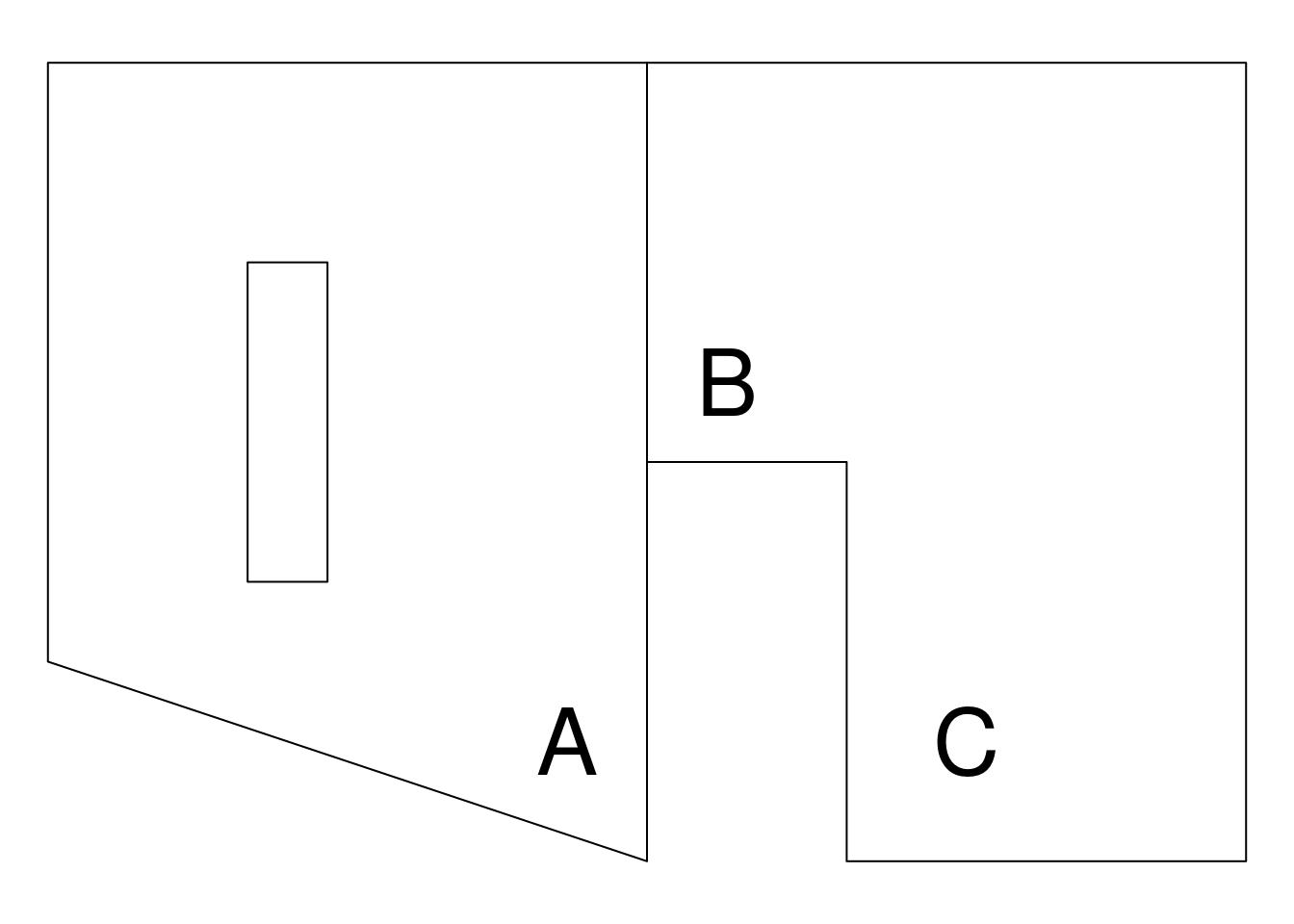

## [1] 24 25 26 27 282.6.2 Non-convex hull meshes

All meshes in Figure 2.12 have been made to have a convex hull

boundary. In this context, a convex hull is a polygon of triangles out of the

domain area, the extension made to avoid the boundary effect. A triangulation

without an additional border can be made by supplying the boundary argument

instead of the location or loc.domain arguments. One way to create a

non-convex hull is to build a boundary for the points and supply it in the

boundary argument.

Boundaries can also be created by using the inla.nonconvex.hull() function,

which takes the following arguments:

str(args(inla.nonconvex.hull))

## function (points, convex = -0.15, concave = convex,

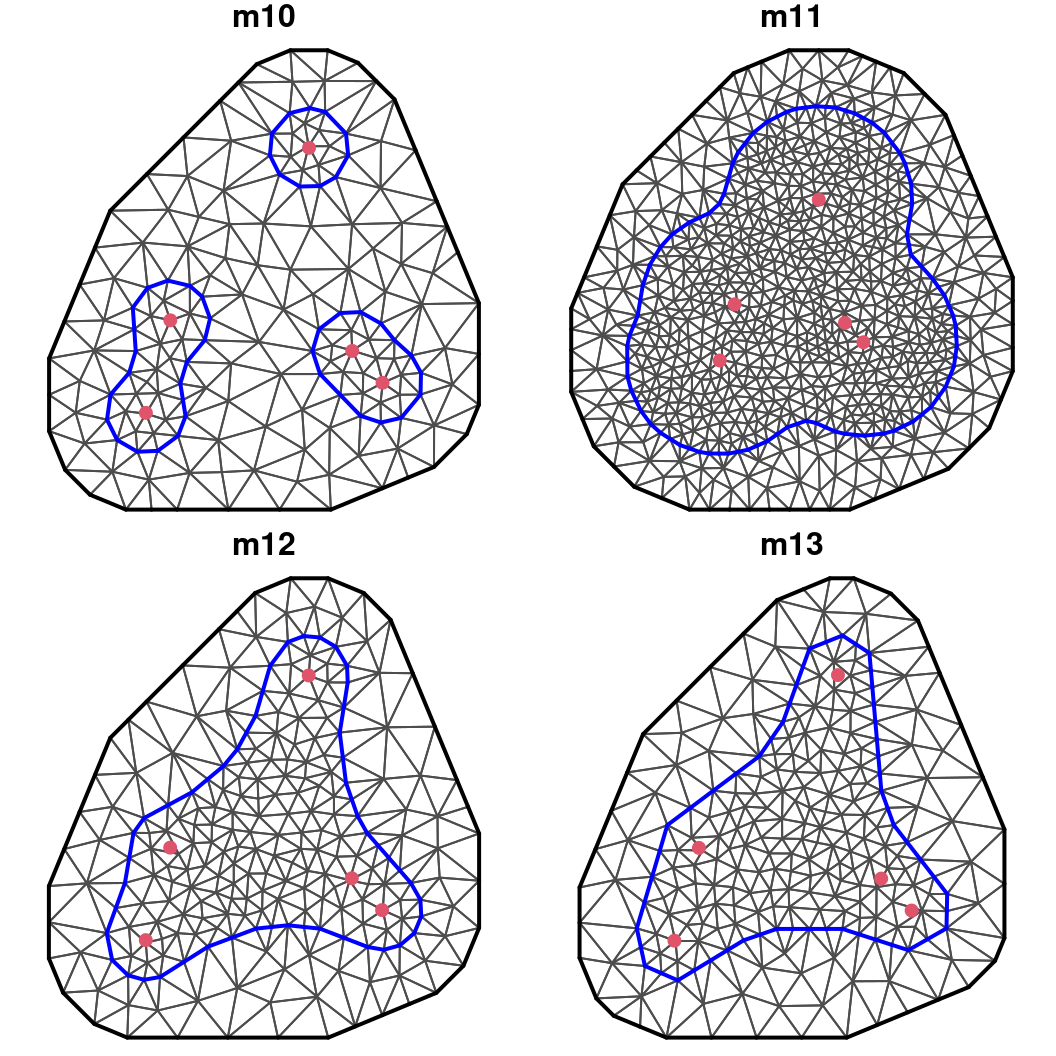

## resolution = 40, eps = NULL, crs = NULL)When using this function, the points and a set of constraints need to be passed. The shape of the boundary can be controlled, including its convexity, concavity and resolution. In the next example, some boundaries are created first and then a mesh is built with each one to better understand how this process works:

# Boundaries

bound1 <- inla.nonconvex.hull(p5)

bound2 <- inla.nonconvex.hull(p5, convex = 0.5, concave = -0.15)

bound3 <- inla.nonconvex.hull(p5, concave = 0.5)

bound4 <- inla.nonconvex.hull(p5, concave = 0.5,

resolution = c(20, 20))

# Meshes

m10 <- inla.mesh.2d(boundary = bound1, cutoff = 0.05,

max.edge = c(0.1, 0.2))

m11 <- inla.mesh.2d(boundary = bound2, cutoff = 0.05,

max.edge = c(0.1, 0.2))

m12 <- inla.mesh.2d(boundary = bound3, cutoff = 0.05,

max.edge = c(0.1, 0.2))

m13 <- inla.mesh.2d(boundary = bound4, cutoff = 0.05,

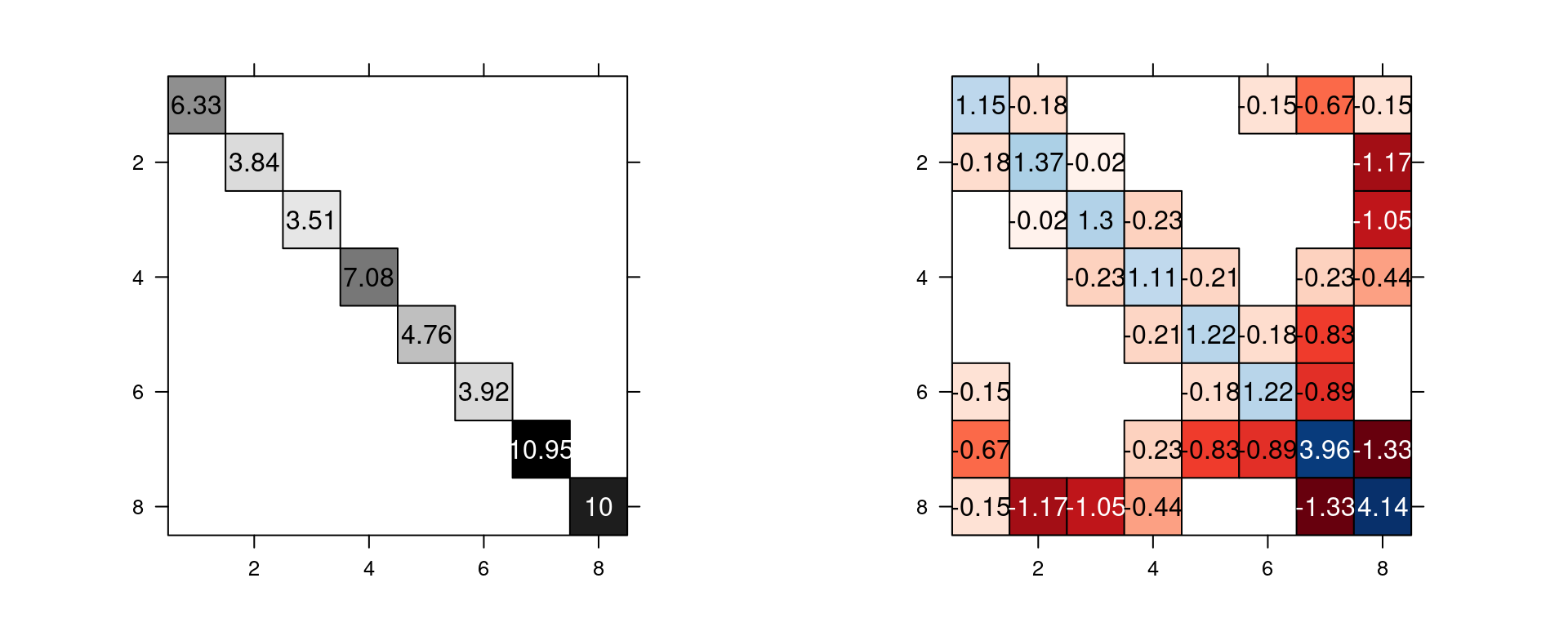

max.edge = c(0.1, 0.2))These meshes have been displayed in Figure 2.13 and a discussion on the quality of the produced meshes is provided below.

Figure 2.13: Non-convex meshes with different boundaries.

The m10 mesh is built with a boundary that uses the default arguments in the

inla.nonconvex.hull() function. The default convex and concave arguments

are both equal to a proportion of 0.15 of the points’ domain radius, that is

computed as follows:

0.15 * max(diff(range(p5[, 1])), diff(range(p5[, 2])))

## [1] 0.1291If we supply a larger value in the convex argument, such as the one used to

generate mesh m11, a larger boundary is obtained. This happens because all

circles with a center at each point and a radius less than the convex value are

inside the boundary. When a larger value for the concave argument is taken,

as in the boundary used for the m12 and m13 meshes, there are no circles

with radius less than the concave value outside the boundary. If a smaller

resolution is chosen, a boundary with small resolution (in terms of number of

points) is obtained. For example, compare the m12 and m13 meshes.

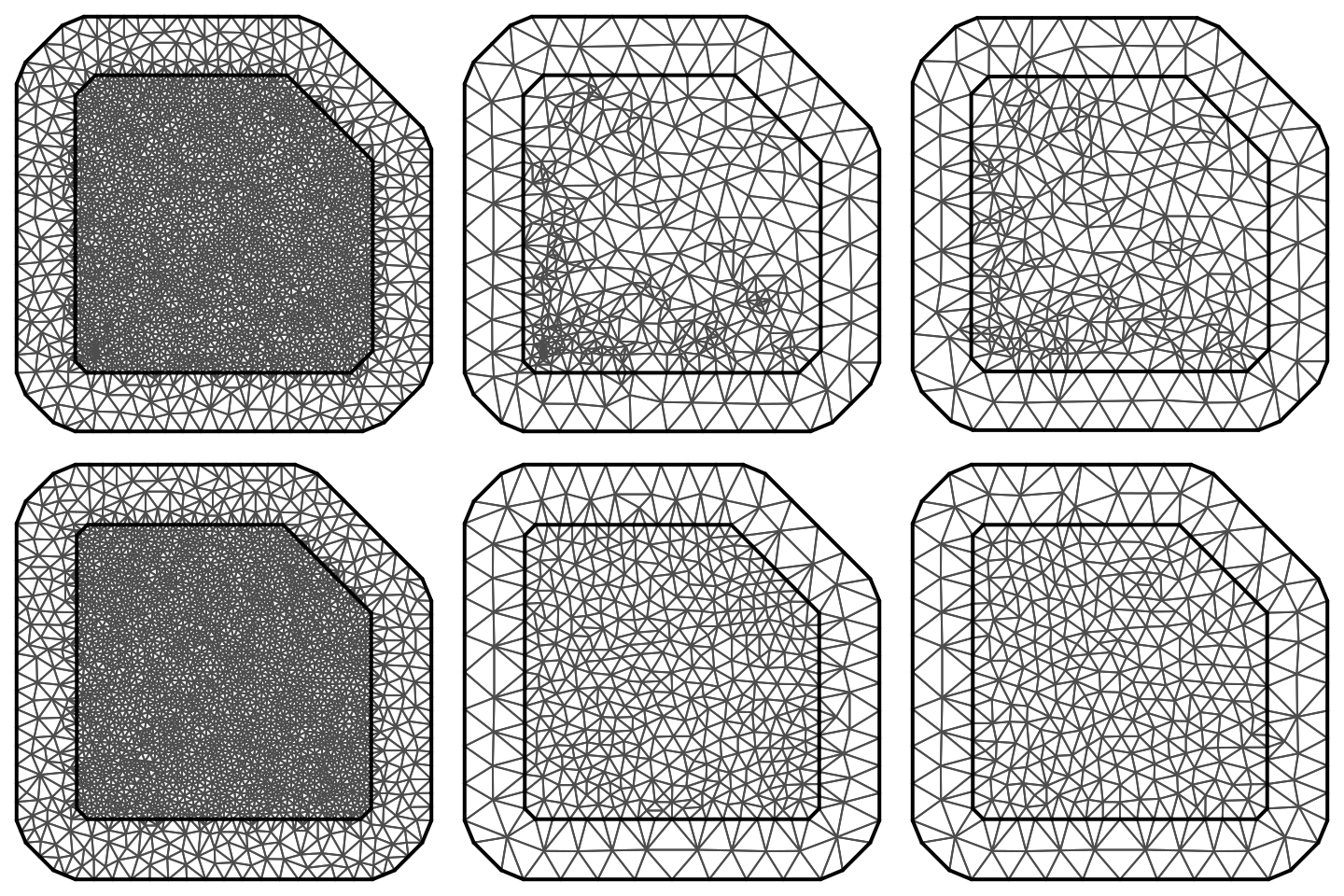

2.6.3 Meshes for the toy example

To analyze the toy data set, six triangulation sets of options were used to make comparisons in Section 2.5.4. The first and second meshes force the location points to be vertices of the mesh, but the maximum length of the edges is allowed to be larger in the second mesh:

mesh1 <- inla.mesh.2d(coords, max.edge = c(0.035, 0.1))

mesh2 <- inla.mesh.2d(coords, max.edge = c(0.15, 0.2)) The third mesh is based on the points, but a cutoff greater than zero is taken to avoid small triangles in regions with a high number of observations:

mesh3 <- inla.mesh.2d(coords, max.edge = c(0.15, 0.2),

cutoff = 0.02)Three other meshes have been created based on the domain area. These are built to have approximately the same number of vertices as the previous ones:

mesh4 <- inla.mesh.2d(loc.domain = pl.dom,

max.edge = c(0.0355, 0.1))

mesh5 <- inla.mesh.2d(loc.domain = pl.dom,

max.edge = c(0.092, 0.2))

mesh6 <- inla.mesh.2d(loc.domain = pl.dom,

max.edge = c(0.11, 0.2))These six meshes are shown in Figure 2.14. The number of nodes in each one of these meshes is:

c(mesh1$n, mesh2$n, mesh3$n, mesh4$n, mesh5$n, mesh6$n)

## [1] 2900 488 371 2878 490 375